Installation

How and where to install OpenServerless

Installation

Overview

If you are in a hurry and you think this guide is TL;DR (too long; didn't read), please read at least our Quick Start single page installation guide.

It gives you an overview of the installation process, omitting some more

advanced details. It can be enough to get you started and install

OpenServerless.

Once you want to know more, you can come back.

If you instead want to read the full documentation first, please read on.

Steps to follow

OpenServerless can be installed in many environments, using our powerful

command line interface ops.

So you should start by downloading the CLI from this page.

Once you have installed ops, check the prerequisites for the installation and satisfy them before installing.

If the prerequisites are OK, you can choose what you want and configure your OpenServerless installation.

Finally, once you have:

downloaded ops

satisfied the prerequisites

configured your installation

you can choose where to install, either:

Post Installation

After the installation, you can later change the configuration and update the system.

Support

If you have issues, please check:

1 - Quick Start

Fast path to install a self-hosted OpenServerless

Quick Start

This is a quick start guide to the installation process, targeting

experienced users in a hurry.

It provides a high-level overview of the installation process, omitting advanced details. The missing pieces are covered in the rest of the documentation.

Of course, if this guide is not enough and things fail, you can always

apply the rule: “if everything fails, read the manual”.

Prerequisites

Start by ensuring the prerequisites are satisfied:

Download and install ops, the OpenServerless CLI, picking the version suitable for your environment.

We support 64-bit versions of recent Windows, MacOS and major Linux

distributions.

Check that ops is correctly installed: open the terminal and write:

ops -info

Configure the services you want to enable. By default, OpenServerless will install only the serverless engine, accessible over HTTP, with no services enabled.

If you want to enable all the services, use:

ops config enable --all

otherwise pick the services you want, among --redis, --mongodb,

--minio, --cron, --postgres. Note that --mongodb is actually

FerretDB and requires Postgres which is

implicitly also enabled. More details here.
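
As a rough illustration of how the service flags combine, the sketch below assembles an ops config enable command line from a list of service names. The helper name build_enable_cmd is hypothetical (it is not part of ops), and passing several flags in a single invocation is an assumption based on the flag list above. The helper only prints the command, so you can review it before running it.

```shell
# Hypothetical helper (not part of ops): print the `ops config enable`
# command for the requested services without executing it.
build_enable_cmd() {
  local svc flags=""
  [ "$#" -gt 0 ] || { echo "usage: build_enable_cmd all | <service>..." >&2; return 1; }
  for svc in "$@"; do
    case "$svc" in
      all) echo "ops config enable --all"; return 0 ;;
      # Service names taken from the flags listed in this guide.
      redis|mongodb|minio|cron|postgres) flags="$flags --$svc" ;;
      *) echo "unknown service: $svc" >&2; return 1 ;;
    esac
  done
  echo "ops config enable$flags"
}
```

For example, build_enable_cmd redis minio prints a command enabling only those two services.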

Now, choose where to install OpenServerless.

Your options are:

locally in your workstation;

in a Linux server in your intranet

in a Linux server available on Internet

in a Kubernetes cluster in your intranet

in cloud, where you can provision a Kubernetes

cluster

Local Installation

If you have a decent workstation (with at least 16GB of memory)

running a recent 64-bit operating system, you can install

Docker Desktop and

then install OpenServerless in it. Once you have:

installed the CLI

configured the services

installed Docker Desktop

Make sure Docker Desktop is running before the next operation. Install OpenServerless and its services in Docker with just this command:

ops setup devcluster

Once it is installed, you can proceed to read the

tutorial to learn how to code with it.

NOTE: At least 16GB of memory is ideal, but if you know what you’re

doing and can tolerate inefficiency, you can install with less using:

export PREFL_NO_MEM_CHECK=1

export PREFL_NO_CPU_CHECK=1

Internet Server Configuration

If you have access to a server on the Internet, you will know its IP

address.

Many cloud providers also give you a DNS name, usually derived from the IP and very hard to remember, such as ec2-12-34-56-78.us-west-2.compute.amazonaws.com.

Once you have the IP address and the DNS name, you can give your server a better name using a domain name provider.

We cannot give here precise instructions as there are many DNS providers

and each has different rules to do the setup. Check with your chosen

domain name provider.

If you have this name, configure it and enable DNS with:

ops config apihost <dns-name> --tls=<email-address>

❗ IMPORTANT

Replace <dns-name> with the actual DNS name, without using prefixes like http:// or suffixes like :443. Also, replace <email-address> with your actual email address.

then proceed with the server installation.
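
Since a stray prefix or port is an easy mistake, you may want to check the name before passing it to ops config apihost. The following is a minimal sketch: the function name is_bare_dns_name and the example values are hypothetical, and the check is only a rough filter, not a full DNS name validation.

```shell
# Hypothetical helper: succeed only if the argument looks like a bare DNS name
# (no scheme such as http://, no :port suffix, no path, no spaces).
is_bare_dns_name() {
  case "$1" in
    ""|*://*|*:*|*/*|*" "*) return 1 ;;
    *) return 0 ;;
  esac
}

# Possible usage (commented out; the name and email are placeholders):
# is_bare_dns_name "api.example.com" &&
#   ops config apihost "api.example.com" --tls="admin@example.com"
```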

Server Installation

Once you have access to a Linux server with:

An IP address or DNS name, referred to as <server>

Passwordless access with ssh to a Linux user <user>

At least 8GB of memory and 50GB of disk space available

The user <user> has passwordless sudo rights

A firewall that allows traffic to ports 80, 443 and 6443

Without any Docker or Kubernetes installed

Without any Web server or Web application installed

then you can install OpenServerless in it.

The server can be physical or virtual. It needs Kubernetes, but the installer also takes care of installing a flavor of Kubernetes, K3S, courtesy of K3Sup.

To install OpenServerless, first check you have access to the server

with:

ssh <user>@<server> sudo hostname

You should see no errors and read the internal hostname of your server.

If you do not receive errors, you can proceed to install OpenServerless

with this command:

ops setup server <server> <user>

❗ IMPORTANT

Replace in the commands <server> with the address of your server, and

<user> with the actual user to use in your server. The <server> can

be the same as <dns-name> you have configured in the previous

paragraph, if you did so, or simply the IP address of a server on your

intranet.
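
The two steps above (check access, then install) can be chained so that the installer runs only when the access check passes. This is a minimal sketch: the function name setup_server_checked and the SSH and OPS override variables are hypothetical conveniences for dry runs, not part of ops. The options -o BatchMode=yes (OpenSSH) and sudo -n make the check fail instead of prompting for a password.

```shell
# Hypothetical wrapper: verify passwordless ssh and sudo, then install.
# SSH and OPS can be overridden (e.g. OPS=echo) to preview the commands.
setup_server_checked() {
  local server="$1" user="$2"
  # BatchMode=yes: never prompt for a password; sudo -n: never prompt either.
  if ! "${SSH:-ssh}" -o BatchMode=yes "$user@$server" sudo -n hostname; then
    echo "passwordless ssh or sudo is not working for $user@$server" >&2
    return 1
  fi
  "${OPS:-ops}" setup server "$server" "$user"
}
```

Calling it with OPS=echo set prints the ops command instead of executing it, which is a cheap way to double-check the arguments.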

Now wait until the installation completes. Once it is installed, you can

proceed to read the tutorial to learn how to

code with it.

Cloud Cluster Provisioning

If you have access to a cloud provider, you can set up a Kubernetes

cluster in it. The Kubernetes cluster needs to satisfy certain

prerequisites to be able to

install OpenServerless with no issues.

We provide the support to easily configure and install a compliant

Kubernetes cluster for the following clouds:

At the end of the installation you will have available and accessible a

Kubernetes Cluster able to install OpenServerless, so proceed with a

cluster installation.

Amazon AWS

Configure and install an Amazon EKS cluster on Amazon AWS with:

ops config eks

ops cloud eks create

then install the cluster.

Azure AKS

Configure and install an Azure AKS cluster on Microsoft Azure with:

ops config aks

ops cloud aks create

then install the cluster.

Google Cloud GKE

Configure and install a Google Cloud GKE cluster with:

ops config gke

ops cloud gke create

then install the cluster.
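
The three cloud sections follow the same pattern: configure, create, then install. A minimal sketch of that sequence is shown below. The function name provision_and_install and the OPS override variable are hypothetical; the ops subcommands are the ones listed above. Each step runs only if the previous one succeeded.

```shell
# Hypothetical wrapper around the configure / create / install sequence.
# Set OPS=echo to preview the commands instead of running them.
provision_and_install() {
  local provider="$1"
  case "$provider" in
    eks|aks|gke) ;;
    *) echo "unsupported provider: $provider (use eks, aks or gke)" >&2; return 1 ;;
  esac
  "${OPS:-ops}" config "$provider" &&        # answer the interactive questions
  "${OPS:-ops}" cloud "$provider" create &&  # provision the Kubernetes cluster
  "${OPS:-ops}" setup cluster                # install OpenServerless in it
}
```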

Cluster Install

In short, if you have access to a Kubernetes cluster, you can install OpenServerless with:

ops setup cluster

For a slightly longer discussion, checking prerequisites before

installing, read on.

Prerequisites to install

If you have access to a Kubernetes cluster with:

Access to the cluster-admin role

Block storage configured as the default storage class

The nginx-ingress installed

Knowledge of the IP address of your nginx-ingress controller

you can install OpenServerless in it. You can read more details

here.

You can get this access either by provisioning a Kubernetes cluster in the cloud or by getting access to one from your system administrator.

Whatever the way you get access to your Kubernetes cluster, you will end

up with a configuration file which is usually stored in a file named

.kube/config in your home directory. This file will give access to the

Kubernetes cluster to install OpenServerless.

To install, first verify that you actually have access to the Kubernetes cluster by running this command:

ops debug kube info

You should get information about your cluster, something like this:

Kubernetes control plane is running at https://api.nuvolaris.osh.n9s.cc:6443

Now you can finally install OpenServerless with the command:

ops setup cluster

Wait until the process is complete and if there are no errors,

OpenServerless is installed and ready to go.
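
The verification and installation steps can be combined so that the installation starts only when the cluster is reachable. This is a sketch under stated assumptions: the function name install_on_cluster and the OPS override variable are hypothetical, and the check only looks for the usual ~/.kube/config location mentioned above.

```shell
# Hypothetical wrapper: check the kubeconfig, verify access, then install.
# Set OPS=echo to preview the commands instead of running them.
install_on_cluster() {
  if [ ! -f "$HOME/.kube/config" ]; then
    echo "kubeconfig not found in $HOME/.kube/config" >&2
    return 1
  fi
  # Install only if the cluster answers the info request.
  "${OPS:-ops}" debug kube info && "${OPS:-ops}" setup cluster
}
```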

Once it is installed, you can proceed to read the

Tutorial to learn how to code with it.

2 - Download

Download OpenServerless with ops CLI

Download and Install ops

What is ops?

As you can guess it helps with operations: ops is the OPenServerless CLI.

It is a task executor on steroids.

- it embeds task, wsk and a lot of other utility commands (check with ops -help)

- it automatically downloads and updates command line tools, prerequisites and tasks

- taskfiles are organized in commands and subcommands, hierarchically and are powered by docopt

- it supports plugins

The predefined set of tasks is all you need to install and manage an OpenServerless cluster.

Download links

You can install OpenServerless using its Command Line Interface, ops.

⚠ WARNING

Since we are in a preview phase, this is not an official link approved by the Apache Software Foundation.

Quick install in Linux, MacOS and Windows with WSL or GitBash:

curl -sL bit.ly/get-ops | bash

Quick install in Windows with PowerShell

irm bit.ly/get-ops-exe | iex

After the installation

Once installed, on the first run ops will tell you to update the tasks by executing:

ops -update

This command updates the OpenServerless “tasks” (its internal logic) to the latest version. It should also be executed frequently, as the tasks are continuously evolving and expanding.

ops will suggest when to update them (at least once a day).

You normally just need to update the tasks but sometimes you also need

to update ops itself. The system will detect when it is the case and

tell you what to do.

Where to find more details:

For more details, please visit the GitHub page of the OpenServerless CLI.

3 - Prerequisites

Prerequisites to install OpenServerless

This page lists the prerequisites to install OpenServerless in various

environments.

You can install OpenServerless:

for development in a single node environment,

either in your local machine or in a Linux server.

for production, in a multi node environment

provided by a Kubernetes cluster.

Single Node development installation

For development purposes, you can install a single node

OpenServerless deployment in the following environments as soon as the

following requirements are satisfied:

Our installer can automatically install a Kubernetes environment, using

K3S, but if you prefer you can install a single-node

Kubernetes instance by yourself.

If you choose to install Kubernetes on your server, we provide support

for:

Multi Node production installation

For production purposes, you need a multi-node Kubernetes cluster

that satisfies those requirements,

accessible with its kubeconfig file.

If you have such a cluster, you can install

OpenServerless in a Kubernetes cluster

If you do not have a cluster and you need to set one up, we provide support for provisioning a suitable cluster that satisfies our requirements for the following Kubernetes environments:

Once you have a suitable Kubernetes cluster, you can proceed to install OpenServerless.

3.1 - Local Docker

Install OpenServerless with Docker locally

Prerequisites to install OpenServerless with Docker

You can install OpenServerless on your local machine using Docker. This page lists the prerequisites.

First of all, you need a computer with at least 16GB of memory and 30GB of available disk space.

❗ IMPORTANT

8GB are definitely not enough to run OpenServerless on your local

machine.
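
If you want to check the disk space requirement from a terminal, a sketch like the following may help. The function name has_free_space_gb is hypothetical; df -Pk is the POSIX form of df, which reports available space in 1024-byte blocks in the fourth column. Memory is not checked here because the way to read it differs between operating systems.

```shell
# Hypothetical helper: succeed if the filesystem holding PATH has at least
# MIN_GB gigabytes available.
has_free_space_gb() {
  local path="$1" min_gb="$2" avail_kb
  # Second output line of df -Pk, fourth column: available 1024-byte blocks.
  avail_kb="$(df -Pk "$path" | awk 'NR==2 {print $4}')"
  [ "$avail_kb" -ge $((min_gb * 1024 * 1024)) ]
}

# Possible usage (commented out):
# has_free_space_gb "$HOME" 30 || echo "less than 30GB available" >&2
```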

Furthermore, you need to install Docker. Let's see which one to install and configure if you have:

- Windows

- MacOS

- Linux

Windows

You require the 64-bit edition for the Intel architecture of a recent version of Windows (at least version 10). The installer ops does not run on 32-bit versions or on the ARM architecture.

Download and install Docker

Desktop for Windows.

Once installed, you can proceed to configuring OpenServerless for the installation.

MacOS

You require a recent version of MacOS (at least version 11.x Big Sur).

The installer ops is available both for Intel and ARM.

Download and install Docker

Desktop for MacOS.

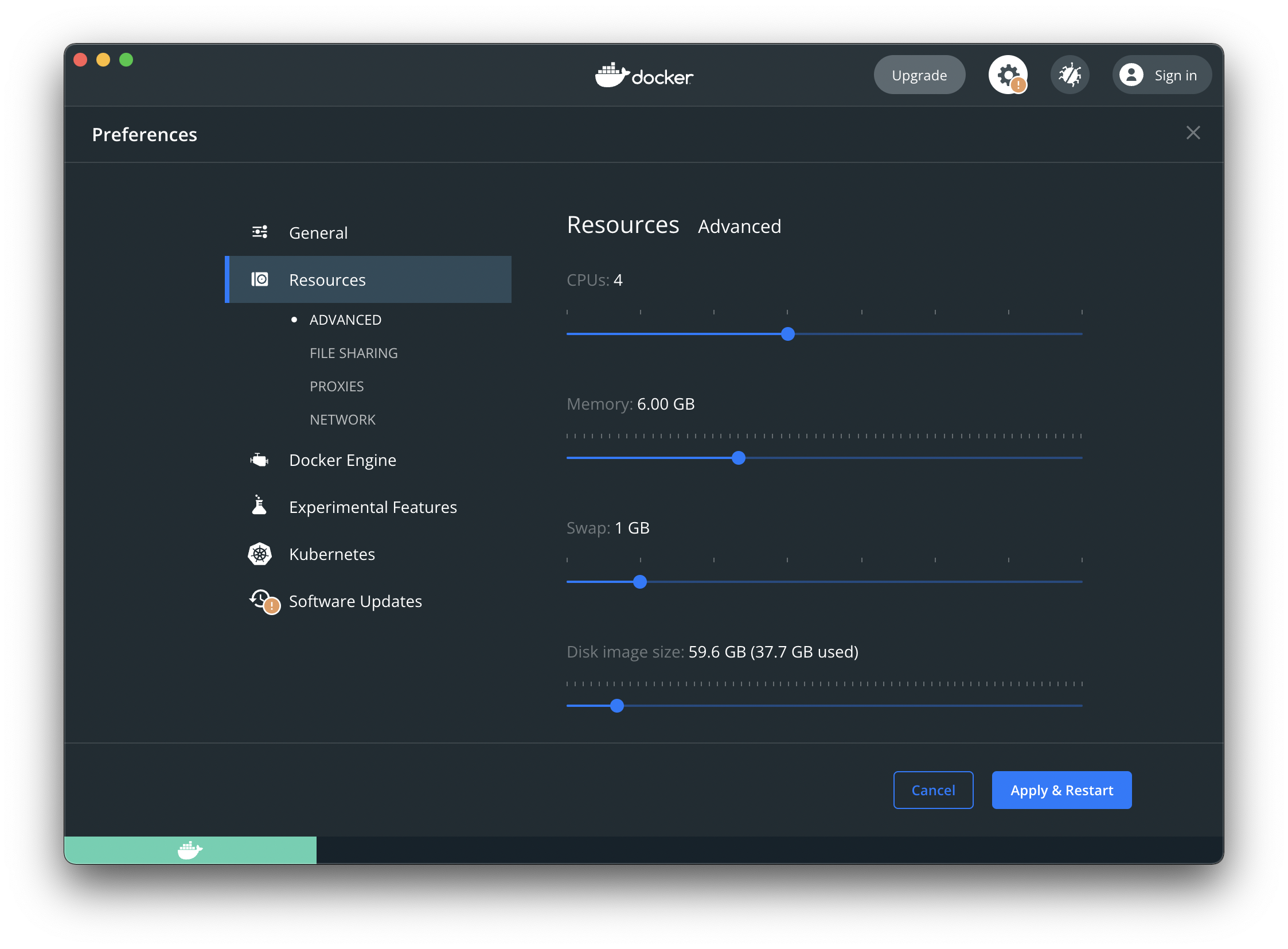

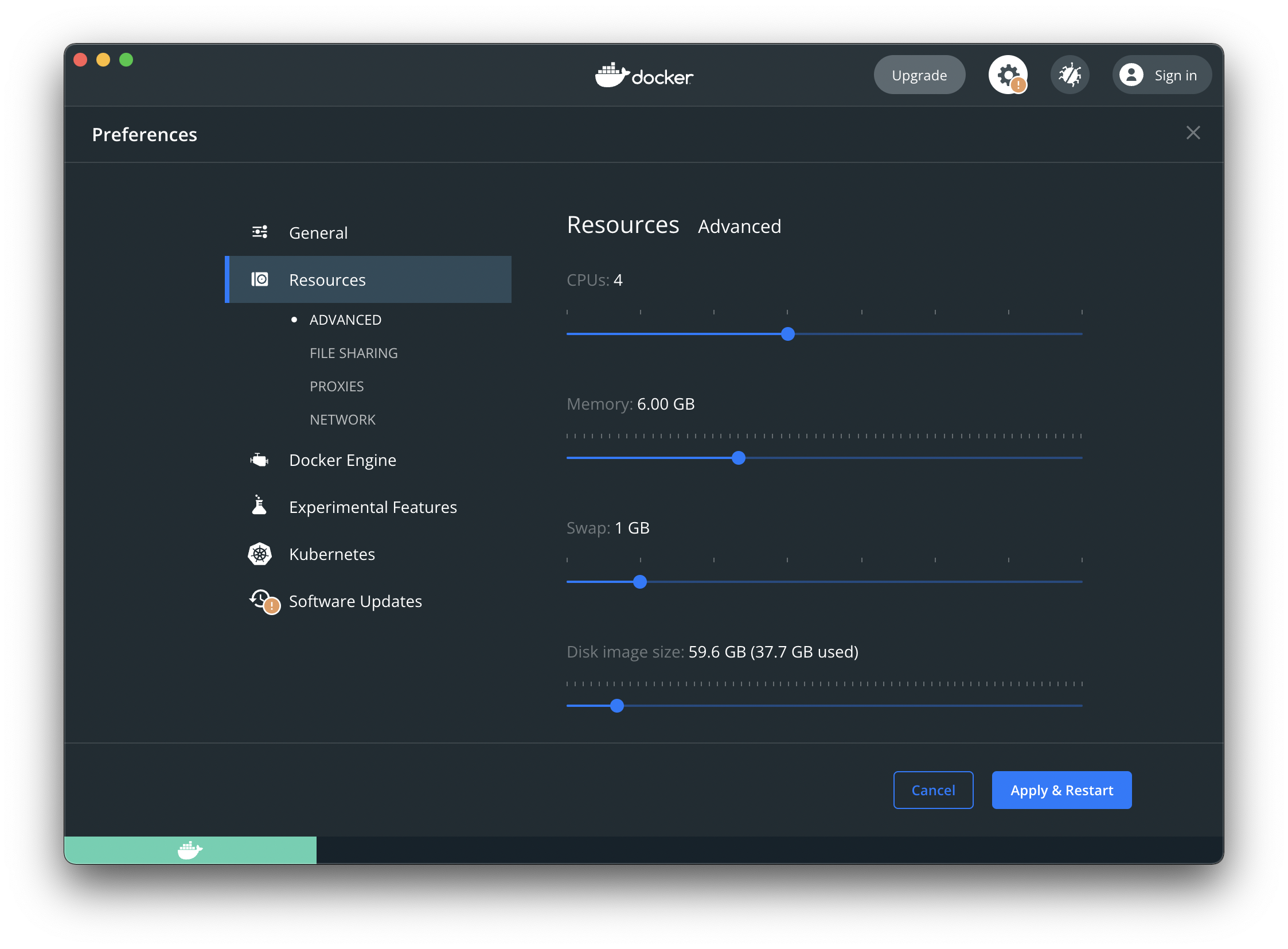

Since MacOS uses a virtual machine with constrained memory for Docker, you also need to reserve at least 8GB for it.

❗ IMPORTANT

On MacOS, Docker defaults to 2GB of memory, which is definitely not enough to run OpenServerless on your local machine.

Instructions to increase the memory reserved to Docker Desktop on MacOS:

Once installed, you can proceed to configuring OpenServerless for the installation.

Linux

Docker Desktop is also available on Linux; however, we advise installing the server Docker Engine instead.

On Linux, the Docker Engine for the server does not run in a virtual

machine, so it is faster and uses less memory.

Once installed, you can proceed to configuring OpenServerless for the installation.

3.2 - Linux Server

Install OpenServerless in a Linux server

Prerequisites to install OpenServerless in a Linux server

You can install OpenServerless on any server running a Linux distribution, either in your intranet or on the Internet, with the following requirements:

You know the IP address or DNS name of the server on Internet or

in your Intranet.

The server requires at least 8GB of memory and 30GB of disk space

available.

It should be running a Linux distribution supported by

K3S.

You must open the firewall to access ports 80, 443 and 6443 (for

K3S) or 16443 (for MicroK8S) from your machine.

You have to install a

public ssh key to access it

without a password.

You have to configure

sudo to execute root

commands without a password.

You can:

Once you have such a server you can optionally (it is not required)

install K3S or

MicroK8S in it.

Once you have configured your server, you can proceed to configuring OpenServerless for the installation.

3.2.1 - SSH and Sudo

General prerequisites to install OpenServerless

If you have access to a generic Linux server, to be able to install

OpenServerless it needs to:

be accessible without a password with ssh

be able to run root commands without a password with sudo

open the ports 80, 443 and 6443 or 16443

If your server does not already satisfy those requirements, read below for information on how to create an SSH key, configure sudo and open the firewall.

Installing a public SSH key

To connect to a server without a password using OpenSSH (used by the installer), you need a pair of files called SSH keys.

You can generate them on the command line using this command:

ssh-keygen

It will create a pair of files, typically called:

~/.ssh/id_rsa

~/.ssh/id_rsa.pub

where ~ is your home directory.

You have to keep the id_rsa file secret because it is the private key and contains the information that identifies you uniquely. Think of it as your password.

You can copy the id_rsa.pub file to the server or even share it publicly, as it is the public key. Think of it as your login name: adding this file to the server adds you to the users who can log into it.

Once you have generated the public key, access your server, then edit

the file ~/.ssh/authorized_keys adding the public key to it.

It is just one line, contained in the id_rsa.pub file.

Create the file if it does not exist. Append the line to the file (as a

single line) if it already exists. Do not remove other lines if you do

not want to remove access to other users.
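
The editing step above can also be scripted. The sketch below appends a public key to authorized_keys only if it is not already there, so running it twice does not create duplicates. The function name add_authorized_key is hypothetical, and the sketch assumes you have already copied the id_rsa.pub file to the server. OpenSSH also ships ssh-copy-id, which performs a similar operation from your workstation.

```shell
# Hypothetical helper, to be run on the server: add a public key to
# authorized_keys without touching the lines already in the file.
add_authorized_key() {
  local pubkey_file="$1" auth_file="${2:-$HOME/.ssh/authorized_keys}"
  local key
  key="$(cat "$pubkey_file")" || return 1
  # Create the directory and file if missing, with the strict permissions
  # that sshd normally expects.
  mkdir -p "$(dirname "$auth_file")" && chmod 700 "$(dirname "$auth_file")"
  touch "$auth_file" && chmod 600 "$auth_file"
  # -x: match the whole line, -F: treat the key as a fixed string.
  if ! grep -qxF -- "$key" "$auth_file"; then
    printf '%s\n' "$key" >> "$auth_file"
  fi
}
```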

Configure Sudo

You normally access Linux servers using a user that is not root

(the system administrator with unlimited power on the system).

Depending on the system, the user to use may be ubuntu, ec2-user, admin or something else entirely. However, if you have access to the server, you should have been told which user to use, including a way to access the root user.

You need to give this user the right to execute commands as root

without a password, and you do this by configuring the command sudo.

You usually have either access to root with the su command, or you can

execute sudo with a password.

Type either su or sudo bash to become root and edit the file

/etc/sudoers adding the following line:

<user> ALL=(ALL) NOPASSWD:ALL

where <user> is the user you use to log into the system.
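
As an alternative to editing /etc/sudoers by hand, many distributions also read drop-in files from /etc/sudoers.d. The sketch below only generates the line; the commented usage assumes that your /etc/sudoers includes that directory, and the file name 90-openserverless is a hypothetical choice. visudo -cf checks the syntax of a file, which helps you avoid locking yourself out with a malformed rule.

```shell
# Hypothetical helper: print the sudoers rule for passwordless sudo.
sudoers_line() {
  printf '%s ALL=(ALL) NOPASSWD:ALL\n' "$1"
}

# Possible usage as root (commented out; file name is a hypothetical choice):
# sudoers_line ubuntu > /etc/sudoers.d/90-openserverless
# chmod 440 /etc/sudoers.d/90-openserverless
# visudo -cf /etc/sudoers.d/90-openserverless   # syntax check
```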

Open the firewall

You need to open the following ports in the firewall of the server:

For information on how to open the firewall, please consult the documentation of your cloud provider or contact your system administrator, as there are no common procedures and they depend on the cloud provider.

3.2.2 - Server on AWS

Prerequisites to install OpenServerless in AWS

Provision a Linux server in Amazon Web Services

You can provision a server suitable to install OpenServerless in the cloud provider Amazon Web Services using ops as follows:

install aws, the AWS CLI

get Access and Secret Key

configure AWS

provision a server

retrieve the ip address to configure a DNS name

Once you have a Linux server up and running you can proceed to configuring and installing OpenServerless.

Installing the AWS CLI

Our CLI ops uses the AWS CLI version 2 under the hood, so you need to download and install it following those instructions.

Once installed, ensure it is available on the terminal executing the

following command:

aws --version

you should receive something like this:

aws-cli/2.9.4 Python/3.9.11 Linux/5.19.0-1025-aws exe/x86_64.ubuntu.22 prompt/off

Ensure the version is at least 2.
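
If you prefer to script this check, the major version can be extracted from the first word of the output shown above. This is a minimal sketch based only on that sample format; the function name aws_cli_major is hypothetical.

```shell
# Hypothetical helper: extract the major version from a string such as
# "aws-cli/2.9.4 Python/3.9.11 Linux/... prompt/off".
aws_cli_major() {
  local first="${1%% *}"             # first word, e.g. "aws-cli/2.9.4"
  local version="${first#aws-cli/}"  # strip the prefix, e.g. "2.9.4"
  echo "${version%%.*}"              # keep the part before the first dot
}

# Possible usage (commented out); 2>&1 captures the text on either stream:
# [ "$(aws_cli_major "$(aws --version 2>&1)")" -ge 2 ] || echo "AWS CLI v2 required" >&2
```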

Getting the Access and Secret key

Next step is to retrieve credentials, in the form of an access key and a

secret key.

So you need to:

You will end up with a pair of strings as follows:

Sample AWS Access Key ID: AKIAIOSFODNN7EXAMPLE

Sample AWS Secret Access Key: wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY

Take note of them as you need them for configuring our CLI.

Before you can provision a Linux server you have to configure AWS typing

the command:

ops config aws

The system will then ask the following questions:

*** Please, specify AWS Access Id and press enter.

AKIAIOSFODNN7EXAMPLE

*** Please, specify AWS Secret Key and press enter.

wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY

*** Please, specify AWS Region to use and press enter.

To get a list of valid values use:

aws ec2 describe-regions --output table

Just press enter for default [us-east-1]:

*** Please, specify AWS public SSH key and press enter.

If you already have a public SSH key in AWS, provide its name here.

If you do not have it, generate a key pair with the following command:

ssh-keygen

The public key defaults to ~/.ssh/id_rsa.pub and you can import with:

aws ec2 import-key-pair --key-name nuvolaris-key --public-key-material --region=<your-region> fileb://~/.ssh/id_rsa.pub

Just press enter for default [devkit-74s]:

*** Please, specify AWS Image to use for VMs and press enter.

The suggested image is an Ubuntu 22 valid only for us-east-1

Please check AWS website for alternative images in other zones

Just press enter for default [ami-052efd3df9dad4825]:

*** Please, specify AWS Default user for image to use for VMs and press enter.

Default user to access the selected image.

Just press enter for default [ubuntu]:

*** Please, specify AWS Instance type to use for VMs and press enter.

The suggested instance type has 8GB and 2vcp

To get a list of valid values, use:

aws ec2 describe-instance-types --query 'InstanceTypes[].InstanceType' --output table

Just press enter for default [t3a.large]:

*** Please, specify AWS Disk Size to use for VMs and press enter.

Just press enter for default [100]:

Provision a server

You can provision one or more servers using ops. The servers will use

the parameters you have just configured.

You can create a new server with:

ops cloud aws vm-create <server-name>

❗ IMPORTANT

Replace <server-name> with a name you choose, for example

ops-server

The command will create a new server in AWS with the parameters you

specified in configuration.

You can also:

list servers you created with ops cloud aws vm-list

delete a server you created and you do not need anymore with

ops cloud aws vm-delete <server-name>

Retrieve IP

The server will be provisioned with an IP address assigned by AWS.

You can read the IP address of your server with

ops cloud aws vm-getip <server-name>

You need this IP when configuring a DNS name for

the server.
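
Creating the server and reading its IP address are usually done one after the other, so they can be chained as in the sketch below. The function name provision_aws_server and the OPS override variable are hypothetical; the subcommands are the ones described above. The exact output format of vm-getip is not assumed here, so the sketch simply lets ops print it.

```shell
# Hypothetical wrapper: create a server, then show its IP address.
# Set OPS=echo to preview the commands instead of running them.
provision_aws_server() {
  local name="$1"
  [ -n "$name" ] || { echo "usage: provision_aws_server <server-name>" >&2; return 1; }
  "${OPS:-ops}" cloud aws vm-create "$name" &&
  "${OPS:-ops}" cloud aws vm-getip "$name"
}
```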

3.2.3 - Server on Azure

Prerequisites to install OpenServerless in Azure

You can provision a server suitable to install OpenServerless in the cloud provider Azure using ops as follows:

install az, the Azure CLI

connect a subscription

configure Azure

provision a server

retrieve the ip address to configure a DNS name

Once you have a Linux server up and running you can proceed to configuring and installing OpenServerless.

Installing the Azure CLI

Our CLI ops uses the az command under the hood, so you need to download and install it following those instructions.

Once installed, ensure it is available on the terminal executing the

following command:

az version

you should receive something like this:

{

"azure-cli": "2.64.0",

"azure-cli-core": "2.64.0",

"azure-cli-telemetry": "1.1.0",

"extensions": {

"ssh": "2.0.5"

}

}

Ensure the version is at least 2.64.0
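
Comparing dotted version numbers as plain strings is error-prone (2.9.4 is older than 2.64.0), so a version-aware comparison is safer. The sketch below uses sort -V, which is available in GNU coreutils and recent BSD and MacOS versions of sort; that availability is an assumption about your system. The function name version_ge is hypothetical.

```shell
# Hypothetical helper: succeed when version $1 is greater than or equal to $2.
version_ge() {
  # sort -V orders version strings; if the smallest of the two is $2,
  # then $1 is at least $2.
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" = "$2" ]
}

# Possible usage (commented out), passing the "azure-cli" value shown above:
# version_ge "2.64.0" "2.64.0" || echo "please upgrade the Azure CLI" >&2
```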

Connect a subscription

Next step is to connect az to a valid Azure subscription. Azure

supports several authentication methods: check

which one you prefer.

The easiest is the one described in Sign in interactively:

az login

This will open a browser and you will be asked to log in to your Azure account. Once logged in, the az command will be automatically connected to the chosen subscription.

To check if the az command is properly connected to your subscription, check the output of this command:

$ az account list --query "[].{subscriptionId: id, name: name, user: user.name}" --output table

SubscriptionId Name User

------------------------------------ --------------------------- -------------------------

xxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxxx Microsoft Azure Sponsorship openserverless@apache.org

Before you can provision a Linux server you have to configure OpenServerless for Azure by typing the command:

ops config azcloud

The system will then ask the following questions:

*** Please, specify Azure Project Id and press enter.

Azure Project Id: openserverless-k3s

*** Please, specify Azure Zone and press enter.

To get a list of valid values use:

az account list-locations -o table

Just press enter for default [eastus]:

Azure Zone:

*** Please, specify Azure virtual machine type and press enter.

To get a list of valid values, use:

az vm list-sizes --location <location> -o table

where <location> is your current location.

Just press enter for default [Standard_B4ms]:

Azure virtual machine type:

*** Please, specify Azure vm disk size in gigabyte and press enter.

Just press enter for default [50]:

Azure vm disk size in gigabyte:

*** Please, specify Azure Cloud public SSH key and press enter.

If you already have a public SSH key provide its path here. If you do not have it, generate a key pair with the following command:

ssh-keygen

The public key defaults to ~/.ssh/id_rsa.pub.

Just press enter for default [~/.ssh/id_rsa.pub]:

Azure Cloud public SSH key:

*** Please, specify Azure Cloud VM image and press enter.

Just press enter for default [Ubuntu2204]:

Azure Cloud VM image:

Provision a server

You can provision one or more servers using ops. The servers will use

the parameters you have just configured.

You can create a new server with:

ops cloud azcloud vm-create <server-name>

❗ IMPORTANT

Replace <server-name> with a name you choose, for example

ops-server

The command will create a new server in Azure Cloud with the parameters

you specified in configuration.

You can also:

list servers you created with ops cloud azcloud vm-list

delete a server you created and you do not need anymore with

ops cloud azcloud vm-delete <server-name>

Retrieve IP

The server will be provisioned with an IP address assigned by Azure

Cloud.

You can read the IP address of your server with

ops cloud azcloud vm-getip <server-name>

You need this IP when configuring a DNS name for

the server.

3.2.4 - Install K3S

Prerequisites to install OpenServerless in K3S

Install K3S in a server

You can install OpenServerless as described

here, and you do not need to

install any Kubernetes in it, as it is installed as part of the

procedure. In this case it installs K3S.

Or you can install K3S in advance, and then proceed to configuring and then installing OpenServerless as in any other Kubernetes cluster.

Installing K3S in a server

Before installing, ensure you have satisfied the prerequisites, most notably:

you know the IP address or DNS name

your server operating system satisfies the K3S

requirements

you have passwordless access with ssh

you have a user with passwordless sudo rights

you have opened the port 6443 in the firewall

Then you can use the following subcommand to install in the server:

ops cloud k3s create <server> [<username>]

where <server> is the IP address or DNS name to access the server, and the optional <username> is the user you use to access the server: if it is not specified, the root username will be used.

Those pieces of information should have been provided when provisioning

the server.

❗ IMPORTANT

If you installed a Kubernetes cluster in the server this way, you should proceed to installing OpenServerless as in a Kubernetes cluster, not as a server.

The installation also retrieves a Kubernetes configuration file, so you can proceed to install OpenServerless without any other step involved.

Additional Commands

In addition to create the following subcommands are also available:

ops cloud k3s delete <server> [<username>]:

uninstall K3S from the server

ops cloud k3s kubeconfig <server> [<username>]:

retrieve the kubeconfig from the K3S server

ops cloud k3s info: some information about the server

ops cloud k3s status: status of the server

3.2.5 - Install MicroK8S

Prerequisites to install OpenServerless in MicroK8S

Install MicroK8S in a server

You can install OpenServerless as

described here and you do not need to

install any Kubernetes in it, as it is installed as part of the procedure. In

this case it installs K3S.

But you can install MicroK8S instead, if you prefer. Check here for information about MicroK8S.

If you install MicroK8S in your server, you can then proceed to configuring and then installing OpenServerless as in any other Kubernetes cluster.

Installing MicroK8S in a server

Before installing ensure you have

satisfied the prerequisites, most notably:

you know the IP address or DNS name

you have passwordless access with ssh

you have a user with passwordless sudo rights

you have opened the port 16443 in the firewall

Furthermore, since MicroK8S is installed using snap, you also need to

install snap.

💡 NOTE

While snap is available for many Linux distributions, it is typically pre-installed and well supported in Ubuntu and its derivatives. So we recommend MicroK8S only if you are actually using an Ubuntu-like Linux distribution.

If your system is suitable to run MicroK8S you can use the following subcommand to install in the server:

ops cloud mk8s create SERVER=<server> USERNAME=<username>

where <server> is the IP address or DNS name to access the server, and <username> is the user you use to access the server.

This information should have been provided when provisioning the server.
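
Because MicroK8S depends on snap, it can be useful to confirm that snap exists on the server before starting the installation. The sketch below does that over ssh and then runs the subcommand shown above. The function name install_microk8s and the SSH and OPS override variables are hypothetical; command -v is the standard shell way to test whether a program is available.

```shell
# Hypothetical wrapper: check for snap on the server, then install MicroK8S.
# Set OPS=echo to preview the ops command instead of running it.
install_microk8s() {
  local server="$1" user="$2"
  # BatchMode=yes makes ssh fail instead of asking for a password.
  if ! "${SSH:-ssh}" -o BatchMode=yes "$user@$server" 'command -v snap' >/dev/null; then
    echo "snap not found on $server (or ssh access failed)" >&2
    return 1
  fi
  "${OPS:-ops}" cloud mk8s create "SERVER=$server" "USERNAME=$user"
}
```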

❗ IMPORTANT

If you installed a Kubernetes cluster in the server in this way, you should proceed to installing OpenServerless as in a Kubernetes cluster, not as a server.

The installation also retrieves a Kubernetes configuration file, so you can proceed to install OpenServerless without any other step involved.

Additional Commands

In addition to create you have available also the following

subcommands:

ops cloud mk8s delete SERVER=<server> USERNAME=<username>:

uninstall MicroK8S from the server

ops cloud mk8s kubeconfig SERVER=<server> USERNAME=<username>:

retrieve the kubeconfig from the MicroK8S server

ops cloud mk8s info: information about the server

ops cloud mk8s status: status of the server

3.3 - Kubernetes Cluster

Install OpenServerless in a Kubernetes cluster

Prerequisites to install OpenServerless in a Kubernetes cluster

You can install OpenServerless in any Kubernetes cluster that satisfies some requirements.

Kubernetes clusters are available pre-built from a variety of cloud

providers. We provide with our ops tool the commands to install a

Kubernetes cluster ready for OpenServerless in the following

environments:

You can also provision a suitable cluster by yourself, in any cloud or on premises, ensuring the prerequisites are satisfied.

Once provisioned, you will receive a configuration file to access the

cluster, called kubeconfig.

This file should be placed in ~/.kube/config to give access to the

cluster

If you have this file, you can check if you have access to the cluster

with the command:

ops debug kube info

You should see something like this:

Kubernetes control plane is running at https://xxxxxx.yyy.us-east-1.eks.amazonaws.com

Once you have access to the Kubernetes cluster, either by installing one with our commands or by provisioning one yourself, you can proceed to configuring the installation and then installing OpenServerless in the cluster.

3.3.1 - Amazon EKS

Prerequisites for Amazon EKS

Prerequisites to install OpenServerless in an Amazon EKS Cluster

Amazon EKS is a pre-built Kubernetes

cluster offered by the cloud provider Amazon Web

Services.

You can create an EKS Cluster in Amazon AWS for installing OpenServerless using ops as follows:

install aws, the AWS CLI

get Access and Secret Key

configure EKS

provision EKS

optionally, retrieve the load balancer address to

configure a DNS name

Once you have EKS up and running you can proceed to configuring and installing OpenServerless.

Installing the AWS CLI

Our CLI ops uses the AWS CLI version 2 under the hood, so you need to download and install it following those instructions.

Once installed, ensure it is available on the terminal executing the

following command:

aws --version

you should receive something like this:

aws-cli/2.9.4 Python/3.9.11 Linux/5.19.0-1025-aws exe/x86_64.ubuntu.22 prompt/off

Ensure the version is at least 2.

Getting the Access and Secret key

Next step is to retrieve credentials, in the form of an access key and a

secret key.

So you need to:

access the AWS console following those instructions

create an access key and secret key

give the credentials the minimum required permissions as described here to build an EKS cluster.

You will end up with a pair of strings as follows:

Sample AWS Access Key ID: AKIAIOSFODNN7EXAMPLE

Sample AWS Secret Access Key: wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY

Take note of them as you need them for configuring our CLI.

Once you have the access and secret key you can configure EKS with the command ops config eks, answering all the questions as in the following example:

$ ops config eks

*** Please, specify AWS Access Id and press enter.

AKIAIOSFODNN7EXAMPLE

*** Please, specify AWS Secret Key and press enter.

wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY

*** Please, specify AWS Region to use and press enter.

To get a list of valid values use:

aws ec2 describe-regions --output table

Just press enter for default [us-east-2]:

*** Please, specify AWS public SSH key and press enter.

If you already have a public SSH key in AWS, provide its name here.

If you do not have it, generate a key pair with the following command:

ssh-keygen

The public key defaults to ~/.ssh/id_rsa.pub and you can import with:

aws ec2 import-key-pair --key-name nuvolaris-key --public-key-material --region=<your-region> fileb://~/.ssh/id_rsa.pub

Just press enter for default [nuvolaris-key]:

*** Please, specify EKS Name for Cluster and Node Group and press enter.

Just press enter for default [nuvolaris]:

*** Please, specify EKS region and press enter.

To get a list of valid values use:

aws ec2 describe-regions --output table

Just press enter for default [us-east-1]:

*** Please, specify EKS number of worker nodes and press enter.

Just press enter for default [3]:

*** Please, specify EKS virtual machine type and press enter.

To get a list of valid values, use:

aws ec2 describe-instance-types --query 'InstanceTypes[].InstanceType' --output table

Just press enter for default [m5.xlarge]:

*** Please, specify EKS disk size in gigabyte and press enter.

Just press enter for default [50]:

*** Please, specify EKS Kubernetes Version and press enter.

Just press enter for default [1.25]:

Provisioning Amazon EKS

Once you have configured it, you can create the EKS cluster with the

command:

ops cloud eks create

It will take around 20 minutes to be ready. Please be patient.

At the end of the process, you will have direct access to the created Kubernetes cluster for installation.

Retrieving the Load Balancer DNS name

Once the cluster is up and running, you need to retrieve the DNS name of

the load balancer.

You can read this with the command:

ops cloud eks lb

Take note of the result, as it is required for configuring a DNS name for your cluster.

Additional Commands

You can delete the created cluster with: ops cloud eks delete

If you lose the cluster configuration, you can extract it again by reconfiguring the cluster and then using the command ops cloud eks kubeconfig.

3.3.2 - Azure AKS

Prerequisites for Azure AKS

Prerequisites to install OpenServerless in an Azure AKS Cluster

Azure AKS is a pre-built Kubernetes

cluster offered by the cloud provider Microsoft Azure.

You can create an AKS cluster in Microsoft Azure for installing OpenServerless using ops as follows:

install az, the Azure CLI

configure AKS

provision AKS

optionally, retrieve the load balancer address to

configure a DNS name

Once you have AKS up and running you can proceed to configuring and installing OpenServerless.

Installing the Azure CLI

Our CLI ops uses the Azure CLI under the hood, so you need to download and install it following these instructions.

Once installed, ensure it is available in the terminal by executing the following command:

az version

You should receive something like this:

{

"azure-cli": "2.51.0",

"azure-cli-core": "2.51.0",

"azure-cli-telemetry": "1.1.0",

"extensions": {}

}

Before provisioning your AKS cluster you need to configure AKS with the command ops config aks, answering all the questions as in the following example:

$ ops config aks

*** Please, specify AKS Name for Cluster and Resource Group and press enter.

Just press enter for default [nuvolaris]:

*** Please, specify AKS number of worker nodes and press enter.

Just press enter for default [3]:

*** Please, specify AKS location and press enter.

To get a list of valid values use:

az account list-locations -o table

Just press enter for default [eastus]:

*** Please, specify AKS virtual machine type and press enter.

To get a list of valid values use:

az vm list-sizes --location <location> -o table

where <location> is your current location.

Just press enter for default [Standard_B4ms]:

*** Please, specify AKS disk size in gigabyte and press enter.

Just press enter for default [50]:

*** Please, specify AKS public SSH key in AWS and press enter.

If you already have a public SSH key provide its path here. If you do not have it, generate a key pair with the following command:

ssh-keygen

The public key defaults to ~/.ssh/id_rsa.pub.

Just press enter for default [~/.ssh/id_rsa.pub]:

Provisioning Azure AKS

Once you have configured it, you can create the AKS cluster with the

command:

ops cloud aks create

It will take around 10 minutes to be ready. Please be patient.

At the end of the process, you will have direct access to the created Kubernetes cluster for installation.

Retrieving the Load Balancer DNS name

Once the cluster is up and running, you need to retrieve the DNS name of

the load balancer.

You can read this with the command:

ops cloud aks lb

Take note of the result, as it is required for configuring a DNS name for your cluster.

Additional Commands

You can delete the created cluster with: ops cloud aks delete

If you lose the cluster configuration, you can extract it again by reconfiguring the cluster and then using the command ops cloud aks kubeconfig.

3.3.3 - Generic Kubernetes

Prerequisites for all Kubernetes

Kubernetes Cluster requirements

OpenServerless installs in any Kubernetes cluster which satisfies the

following requirements:

cluster-admin access

at least 3 worker nodes with 4GB of memory each

support for block storage configured as default storage class

support for LoadBalancer services

the nginx ingress already installed

the cert manager already installed

Once you have such a cluster, you need to retrieve the IP address of the Load Balancer associated with the Nginx Ingress. In the default installation, it is installed in the namespace ingress-nginx and it is called ingress-nginx-controller.

In the default installation you can read the IP address with the

following command:

kubectl -n ingress-nginx get svc ingress-nginx-controller

If you have installed it in some other namespace or with another name,

change the command accordingly.

The result should be something like this:

NAME                       TYPE           CLUSTER-IP   EXTERNAL-IP    PORT(S)                      AGE
ingress-nginx-controller   LoadBalancer   10.0.9.99    20.62.156.19   80:30898/TCP,443:31451/TCP   4d1h

Take note of the value under EXTERNAL-IP as you need it in the next

step of installation, configuring DNS.
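If you prefer not to copy the value by hand, the EXTERNAL-IP column can be extracted from the tabular output shown above. This is a minimal sketch, assuming the header row contains a column named EXTERNAL-IP and the service is the first data row; the function name external_ip_from_svc_table is hypothetical.

```shell
# Reads `kubectl get svc` tabular output on stdin and prints the EXTERNAL-IP
# column of the first data row (the row right after the header).
external_ip_from_svc_table() {
  awk 'NR == 1 { for (i = 1; i <= NF; i++) if ($i == "EXTERNAL-IP") col = i; next }
       col && NR == 2 { print $col }'
}

# Usage (requires kubectl and access to the cluster):
#   kubectl -n ingress-nginx get svc ingress-nginx-controller | external_ip_from_svc_table
# kubectl can also print the field directly with a JSONPath expression:
#   kubectl -n ingress-nginx get svc ingress-nginx-controller \
#     -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
```

Note that while the load balancer is still being provisioned, the column may contain a placeholder such as <pending> instead of an address.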

4 - Configure OpenServerless

Configuring OpenServerless Installation

This section guides you through configuring the OpenServerless installation.

Note that you can also skip this configuration, and install

OpenServerless without any configuration.

Once you configure the installation, you can proceed to

Install OpenServerless.

You can then reconfigure the system later.

Minimal Configuration

Without any configuration, you get a minimal OpenServerless:

You can:

4.1 - DNS and SSL

Configuring DNS and SSL


You can use OpenServerless just as a serverless engine, with the default IP address or DNS name provided when you provisioned your server or cluster. If you do so, only http is available, and it is not secure.

If you want your server or cluster to be available under a well-known internet name, you can associate the IP address or the “ugly” default DNS name of your server or cluster with a DNS name of your choice, which you can also use to publish the static front-end of your server.

Furthermore, once you have decided on a DNS name for your server, you can enable the provisioning of an SSL certificate so your server will be accessible with https.

To configure DNS and SSL, the steps are:

retrieve the IP address or the DNS name of your server or cluster

register a DNS name of your choice with your domain name registrar

configure OpenServerless so it knows about the DNS name and SSL and can use them

Retrieving the IP address or the DNS name

If OpenServerless is installed on your local machine with Docker, you cannot configure DNS or SSL, so you can proceed to configuring the services.

If OpenServerless is installed on a single server, after you have satisfied the server prerequisites you will know the IP address or DNS name of your server.

If OpenServerless is installed in a Kubernetes cluster, after you have satisfied the cluster prerequisites you will know either the IP address or the DNS name of the load balancer.

Register a DNS name or wildcard

Using the address of your server or cluster, you need either to configure a DNS name you already own or to contact a domain name registrar to register a new DNS name dedicated to your server or cluster.

You need at least one DNS name in a domain you control, for example nuvolaris.example.com, that points to your IP address or DNS name.

Note that:

If you have an IP address for your load balancer, you need to configure an A record mapping nuvolaris.example.com to that IP address.

If you have a DNS name for your load balancer, you need to configure a CNAME record mapping nuvolaris.example.com to that DNS name.
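The choice between the two record types can be sketched as a small helper. This is only an illustration, assuming a loose check for dotted IPv4 addresses (IPv6 addresses are not handled); the function name dns_record_type and the load balancer host name in the usage line are hypothetical.

```shell
# Prints "A" when the argument looks like a dotted IPv4 address,
# and "CNAME" when it looks like a host name.
dns_record_type() {
  case "$1" in
    '') echo "address is required" >&2; return 1 ;;
    *[!0-9.]*) echo CNAME ;;  # contains something other than digits and dots
    *.*.*.*) echo A ;;        # digits and dots only, at least three dots
    *) echo CNAME ;;
  esac
}

# Usage:
#   dns_record_type 20.62.156.19                        # prints A
#   dns_record_type abc123.us-east-1.elb.amazonaws.com  # prints CNAME
```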

💡 NOTE

If you are registering a dedicated domain name for your cluster, you are advised to register a wildcard name (*) so that every name in example.com resolves to your server.

Registering a wildcard is required to get a different website for each of multiple users.

Once you have registered a single DNS name (for example openserverless.example.com) or a wildcard DNS name (for example *.example.com), you can tell the installer the main DNS name of your cluster or server, as it is not able to detect it automatically. We call this the <apihost>.

💡 NOTE

If you have registered a single DNS name, like openserverless.example.com

use this name as <apihost>.

If you have registered a wildcard DNS name, you have to choose a DNS

name to be used as <apihost>.

We recommend you use a name starting with api: to avoid clashes, user and domain names starting with api are reserved. So if you have a *.example.com wildcard DNS name available, use api.example.com as your <apihost>.
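The rule in this note can be expressed as a short helper. It is a minimal sketch of the naming convention only; the function name apihost_for is hypothetical and is not an ops command.

```shell
# Prints the <apihost> to use for a registered DNS name:
# - a wildcard such as "*.example.com" becomes "api.example.com"
# - a single name is used unchanged
apihost_for() {
  case "$1" in
    '') echo "DNS name is required" >&2; return 1 ;;
    '*.'?*) printf 'api.%s\n' "${1#\*.}" ;;
    *) printf '%s\n' "$1" ;;
  esac
}

# Usage:
#   apihost_for '*.example.com'             # prints api.example.com
#   apihost_for openserverless.example.com  # prints openserverless.example.com
```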

Once you have decided what your API host is, you can configure it as follows:

ops config apihost <apihost>

This configuration assigns a well-known DNS name as the access point of your OpenServerless cluster. However, note that it does NOT enable SSL. Access to your cluster will happen using HTTP.

Since requests contain sensitive information like security keys, this is highly insecure. You should hence do this only for development or testing, but never for production.

Once you have a DNS name, enabling https is pretty easy, since we can do it automatically using the free service Let's Encrypt. However, you have to provide a valid email address <email>.

Once you know your <apihost> and the <email> to receive

communications from Let’s Encrypt (mostly, when a domain name is

invalidated and needs to be renewed), you can configure your apihost and

enable SSL as follows:

ops config apihost <apihost> --tls=<email>

Of course, replace <apihost> with the actual DNS name you registered, and <email> with your email address.
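To avoid typing mistakes, the two forms of the command can be assembled by a small helper that prints the command instead of running it. This is a sketch under assumptions: the function name apihost_command is hypothetical, and the email check is deliberately loose (it only requires an @ with text on both sides).

```shell
# Prints the `ops config apihost` command for the given host.
# When an email is given as second argument, the --tls form is printed.
apihost_command() {
  apihost=$1
  email=${2:-}
  [ -n "$apihost" ] || { echo "apihost is required" >&2; return 1; }
  if [ -n "$email" ]; then
    case "$email" in
      ?*@?*) ;;
      *) echo "email looks invalid: $email" >&2; return 1 ;;
    esac
    printf 'ops config apihost %s --tls=%s\n' "$apihost" "$email"
  else
    printf 'ops config apihost %s\n' "$apihost"
  fi
}

# Usage: review the printed command, then run it yourself.
#   apihost_command api.example.com admin@example.com
```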

4.2 - Services

Configure OpenServerless services

Configuring OpenServerless services

After you have satisfied the prerequisites and before you actually install OpenServerless, you have to select which services you want to install:

Static, publishing of static assets

Redis, a storage service

MinIO, an object storage service

Postgres, a relational SQL database

FerretDB, a MongoDB-compatible adapter for Postgres

You can enable all the services with:

ops config enable --all

or disable all of them with:

ops config disable --all

Or select the services you want, as follows.

Static Asset Publishing

The static service allows you to publish static assets.

💡 NOTE

You need to set up a wildcard DNS name to be able to access them from the Internet.

You can enable the Static service with:

ops config enable --static

and disable it with:

ops config disable --static

Redis

Redis is a fast, in-memory key-value store, usually used as a cache, but in some cases also as a (non-relational) database.

Enable Redis:

ops config enable --redis

Disable Redis:

ops config disable --redis

MinIO

MinIO is an object storage service.

Enable MinIO:

ops config enable --minio

Disable MinIO:

ops config disable --minio

Postgres

Postgres is an SQL (relational) database.

Enable Postgres:

ops config enable --postgres

Disable Postgres:

ops config disable --postgres

FerretDB

FerretDB is a MongoDB-compatible adapter for Postgres. It creates a document-oriented database service on top of Postgres.

💡 NOTE

Since FerretDB uses Postgres as its storage, enabling it also enables the Postgres service, as it is required.

Enable the MongoDB API with FerretDB:

ops config enable --mongodb

Disable the MongoDB API with FerretDB:

ops config disable --mongodb
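The mapping between service names and flags described in this section can be summarized in a helper that prints the commands to run. This is a minimal sketch: the function names service_flag and enable_commands are hypothetical, and one command is printed per service because this guide does not state whether several flags can be combined in a single invocation.

```shell
# Maps a service name to the `ops config enable` flag used in this guide.
# FerretDB is enabled through the --mongodb flag.
service_flag() {
  case "$1" in
    static|redis|minio|postgres) printf '%s\n' "--$1" ;;
    ferretdb|mongodb) printf '%s\n' "--mongodb" ;;
    *) echo "unknown service: $1" >&2; return 1 ;;
  esac
}

# Prints one `ops config enable` command per requested service.
enable_commands() {
  for svc in "$@"; do
    flag=$(service_flag "$svc") || return 1
    printf 'ops config enable %s\n' "$flag"
  done
}

# Usage: review the printed commands, then run them.
#   enable_commands static redis ferretdb
```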

5 - Install OpenServerless

Installation Overview

This page provides an overview of the installation process.

Before installation

Please ensure you have:

Core Installation

Once you have completed the preparation steps, you can proceed with:

💡 NOTE

The install process notifies the Nuvolaris creators of the type of installation (for example: clustered or server installation). No other information is submitted. If you want to disable the notification, you can execute the following command before the setup command:

ops -config DO_NOT_NOTIFY_NUVOLARIS=1

Post installation

After the installation, you can consult the development guide for information on how to reconfigure and update the system.

Support

If something goes wrong, you can check:

5.1 - Docker

Install OpenServerless on a local machine

Local Docker installation

This page describes how to install OpenServerless on your local machine. The

services are limited and not accessible from the outside so it is an

installation useful only for development purposes.

Prerequisites

Before installing, you need to:

💡 NOTE

The static service works perfectly for the default namespace nuvolaris, which links http://localhost to the nuvolaris web bucket. With this setup, adding new users adds an ingress with host set to namespace.localhost, which in theory could also work if the hosts file of the development machine is configured to resolve it to the 127.0.0.1 IP address.
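As an illustration of the hosts file configuration mentioned in this note, the following sketch prints the line that would map a user namespace to the local machine. The function name hosts_entry and the namespace demo are hypothetical, and whether the resulting setup works is, as stated above, only a possibility in theory.

```shell
# Prints a hosts file line resolving <namespace>.localhost to 127.0.0.1.
hosts_entry() {
  [ -n "$1" ] || { echo "namespace is required" >&2; return 1; }
  printf '127.0.0.1 %s.localhost\n' "$1"
}

# Usage on Linux or macOS (appends to /etc/hosts, requires sudo):
#   hosts_entry demo | sudo tee -a /etc/hosts
```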

⚠ WARNING

You cannot have https in a local installation.

If you enable it, the configuration will be ignored.

Installation

Run the commands:

- Minimal configuration

ops config minimal

Behind the scenes, this command writes a cluster configuration file called ~/.ops/config.json, activating these services: static, redis, postgres, ferretdb, minio, cron. Together they constitute the common baseline for development tasks.

- Set up the cluster

ops setup devcluster

and wait until the command terminates.

Log sample of the setup:

ops setup devcluster

Creating cluster "nuvolaris" ...

✓ Ensuring node image (kindest/node:v1.25.3) 🖼

✓ Preparing nodes 📦 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

✓ Joining worker nodes 🚜

✓ Waiting ≤ 1m0s for control-plane = Ready ⏳

• Ready after 1s 💚

Set kubectl context to "kind-nuvolaris"

You can now use your cluster with:

kubectl cluster-info --context kind-nuvolaris --kubeconfig /Users/bruno/.ops/tmp/kubeconfig

Thanks for using kind!

[...continue]

💡 NOTE

The log will continue because, after kind is up and running, the OpenServerless namespace and related services are installed inside it.

It will take a few minutes to complete, so be patient.

Troubleshooting

Usually the setup completes without errors.

However, if ops is unable to complete the setup, you may see this message at the end:

ops: Failed to run task "create": exit status 1

task execution error: ops: Failed to run task "create": exit status 1

ops: Failed to run task "devcluster": exit status 1

task execution error: ops: Failed to run task "devcluster": exit status 1

If this is the case, try to perform an uninstall / reinstall:

ops setup cluster --uninstall

ops config reset

ops config minimal

ops setup devcluster

If this does not solve the problem, please contact the community.
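The uninstall / reinstall sequence can be wrapped in a helper that stops at the first failing step. This is a sketch under assumptions: the function name reinstall_devcluster is hypothetical, the optional argument that replaces the ops binary exists only to allow a dry run, and stopping at the first failure is a design choice of this example rather than documented behavior.

```shell
# Runs the four recovery commands in order and stops at the first failure.
# $1 (optional): command to use instead of `ops`, for example `echo` for a dry run.
reinstall_devcluster() {
  ops_bin=${1:-ops}
  "$ops_bin" setup cluster --uninstall &&
    "$ops_bin" config reset &&
    "$ops_bin" config minimal &&
    "$ops_bin" setup devcluster
}

# Usage:
#   reinstall_devcluster echo   # dry run: prints the arguments of each step
#   reinstall_devcluster        # real run, requires ops on the PATH
```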

Post install

Check the tutorial to learn how to use it.

Uninstall

To uninstall you may:

Uninstall devcluster

This removes the ops namespace and all the services from kind. It is useful to retry an installation when something went wrong.

ops setup cluster --uninstall

ops config reset

Remove devcluster

This will actually remove the nodes from kind:

ops setup devcluster --uninstall

5.2 - Linux Server

Install on a Linux Server

Server Installation

This page describes how to install OpenServerless on a Linux server

accessible with SSH.

This is a single node installation, so it is advisable only for

development purposes.

Prerequisites

Before installing, you need to:

install the OpenServerless CLI ops;

provision a server running a Linux operating system, either a virtual machine or a physical server, and take note of its IP address or DNS name;

configure it to have passwordless ssh access and sudo rights;

open the firewall to have access to ports 80, 443 and 6443 or 16443

from your client machine;

configure the DNS name for the server and choose

the services you want to enable;
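The passwordless ssh and sudo prerequisite can be verified before running the setup. The following is a minimal sketch, assuming OpenSSH on the client and sudo on the server; the function name check_server_access, the SSH_BIN override (used only for a dry run) and the address and user in the usage line are hypothetical.

```shell
# Succeeds when <user> can log in to <server> without a password prompt
# (BatchMode=yes disables interactive prompts) and can run sudo without
# a password (sudo -n fails instead of asking).
check_server_access() {
  server=$1
  user=$2
  "${SSH_BIN:-ssh}" -o BatchMode=yes -o ConnectTimeout=10 "$user@$server" sudo -n true
}

# Usage:
#   check_server_access 203.0.113.10 ubuntu && echo "ssh and sudo are OK"
```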

Installation

If the prerequisites are satisfied, execute the command:

ops setup server <server> <user>

❗ IMPORTANT

In the command above, replace <server> with the IP address or DNS name used to access the server, and <user> with the username you use to access the server.

Wait until the command completes and you will have OpenServerless up and

running.

Post Install

To uninstall, execute the command:

ops setup server <server> <user> --uninstall

5.3 - Kubernetes cluster

Install OpenServerless on a Kubernetes Cluster

Cluster Installation

This section describes how to install OpenServerless on a Kubernetes cluster.

Prerequisites

Before installing, you need to:

Installation

If you have a Kubernetes cluster directly accessible with its

configuration, or you provisioned a cluster in some cloud using ops

embedded tools, you just need to type:

ops setup cluster

Sometimes the kubeconfig includes access to multiple Kubernetes

instances, each one identified by a different <context> name. You can

install the OpenServerless cluster in a specified <context> with:

ops setup cluster <context>
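Before passing a <context>, you may want to confirm that it exists in your kubeconfig. This is a sketch under assumptions: the function name context_exists and the context name my-context are hypothetical, and it assumes ops reads the same kubeconfig that kubectl uses on your machine, which this guide does not confirm.

```shell
# Succeeds when the context name given as $1 appears, as a whole line,
# in the list of context names read from stdin.
context_exists() {
  grep -Fxq -- "$1"
}

# Usage (requires kubectl):
#   kubectl config get-contexts -o name | context_exists my-context \
#     && ops setup cluster my-context
```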

Post Install

To uninstall, execute the command:

ops setup cluster --uninstall

6 - Troubleshooting

How to diagnose and solve issues

Debug

This document gives you hints for diagnostics and solving issues, using

the (hidden) subcommand debug.

Note it is technical and assumes you have some knowledge of how

Kubernetes operates.

Watching

While installing, you can watch the installation (opening another

terminal) with the command:

ops debug watch

Check that no pods go into an error state while deploying.

Configuration

You can inspect the configuration with the ops debug subcommand:

API host: ops debug apihost

Static Configuration: ops debug config

Current Status: ops debug status

Runtimes: ops debug runtimes

Load Balancer: ops debug lb

Images: ops debug images
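When asking for support it can help to collect the output of all the subcommands listed above in one place. The following is a minimal sketch: the function name collect_debug_info, the default directory ops-debug and the optional replacement for the ops binary are hypothetical.

```shell
# Saves the output of each `ops debug` inspection subcommand in its own file.
# $1 (optional): output directory, defaults to ./ops-debug
# $2 (optional): command to use instead of `ops` (for example `echo` to test)
collect_debug_info() {
  out_dir=${1:-ops-debug}
  ops_bin=${2:-ops}
  mkdir -p "$out_dir" || return 1
  for sub in apihost config status runtimes lb images; do
    # Keep going when one subcommand fails, but report it on stderr.
    "$ops_bin" debug "$sub" > "$out_dir/$sub.txt" 2>&1 ||
      echo "ops debug $sub failed" >&2
  done
}

# Usage:
#   collect_debug_info ./ops-debug
```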

Logs

You can inspect logs with the ops debug log subcommand. The logs you can show are:

operator: ops debug log operator (continuously: ops debug log foperator)

controller: ops debug log controller (continuously: ops debug log fcontroller)

database: ops debug log couchdb (continuously: ops debug log fcouchdb)

certificate manager: ops debug log certman (continuously: ops debug log fcertman)

Kubernetes

You can detect which Kubernetes you are using with:

ops debug detect

You can then inspect Kubernetes objects with:

namespaces: ops debug kube ns

nodes: ops debug kube nodes

pod: ops debug kube pod

services: ops debug kube svc

users: ops debug kube users

You can enter a pod by name (use kube pod to find the name) with:

ops debug kube exec P=<pod-name>

Kubeconfig

Usually, ops uses a hidden kubeconfig, so it does not override your Kubernetes configuration.

If you want to go more in-depth and you are knowledgeable about Kubernetes, you can export the kubeconfig with ops debug export F=<file>.

You can overwrite your kubeconfig (be aware there is no backup) with

ops debug export F=-.
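Since the overwrite keeps no backup, you may want to make one yourself first. This is a minimal sketch, assuming your kubeconfig lives at the default kubectl location ~/.kube/config (pass another path if it does not); the function name backup_kubeconfig is hypothetical.

```shell
# Copies the kubeconfig to a timestamped backup next to it and prints the
# path of the backup. $1 (optional): kubeconfig path, default ~/.kube/config
backup_kubeconfig() {
  src=${1:-$HOME/.kube/config}
  [ -f "$src" ] || { echo "no kubeconfig at $src" >&2; return 1; }
  dest="$src.bak.$(date +%Y%m%d%H%M%S)"
  cp -p "$src" "$dest" && printf '%s\n' "$dest"
}

# Usage: overwrite only when the backup succeeded.
#   backup_kubeconfig && ops debug export F=-
```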