
About Apache OpenServerless

Apache OpenServerless is an Open Source project, released under the
Apache License 2.0, that provides a portable and complete Serverless
environment for building cloud-native applications quickly and easily.

Our goal is to make OpenServerless ubiquitous, allowing it to easily
run a complete and portable environment on every Kubernetes.

OpenServerless is based on Apache

OpenWhisk, which provides a powerful,

production-ready serverless engine.

However, the serverless engine is just the beginning, because a

serverless environment requires a set of integrated services.

OpenServerless integrates several additional services with OpenWhisk,
such as databases, object storage, and a cron scheduler.

Furthermore, we test it on many public cloud Kubernetes services and

on-premises Kubernetes vendors.

The platform is paired with a powerful CLI tool, ops, which lets you

deploy OpenServerless quickly and easily everywhere, and perform a lot

of development tasks.

Our goal is to build a complete distribution of a serverless

environment with the following features:

It is easy to install and manage.

Integrates all the key services to build applications.

It is as portable as possible to run potentially in every

Kubernetes.

It is however tested regularly against a set of supported Kubernetes

environments.

If you want to know more about our goals, check our

roadmap

document.

1 - Tutorial

Showcase serverless development in action


This tutorial walks you through developing a simple OpenServerless

application using the Command Line Interface (CLI) and JavaScript (but

any supported language will do).

Its purpose is to showcase serverless development in action by creating

a contact form for a website. We will see the development process from

start to finish, including the deployment of the platform and running

the application.

1.1 - Getting started

Let’s start building a sample application


Build a sample Application

Imagine we have a static website and need server logic to store contacts

and validate data. This would require a server, a database and some code

to glue it all together. With a serverless approach, we can just

sprinkle little functions (that we call actions) on top of our static

website and let OpenServerless take care of the rest. No more setting up

VMs, backend web servers, databases, etc.

In this tutorial, we will see how you can take advantage of several
services that are already part of an OpenServerless deployment and
develop a contact form page that users fill in with their emails and
messages, which are then sent via email to us and stored in a database.

OpenServerless CLI: Ops

Serverless development is mostly performed on the CLI, and

OpenServerless has its tool called ops. It’s a command line tool that

allows you to deploy (and interact with) the platform seamlessly to the

cloud, locally and in custom environments.

Ops is cross-platform and can be installed on Windows, Linux and macOS.

You can find the project and the sources on the Apache OpenServerless
CLI GitHub page.

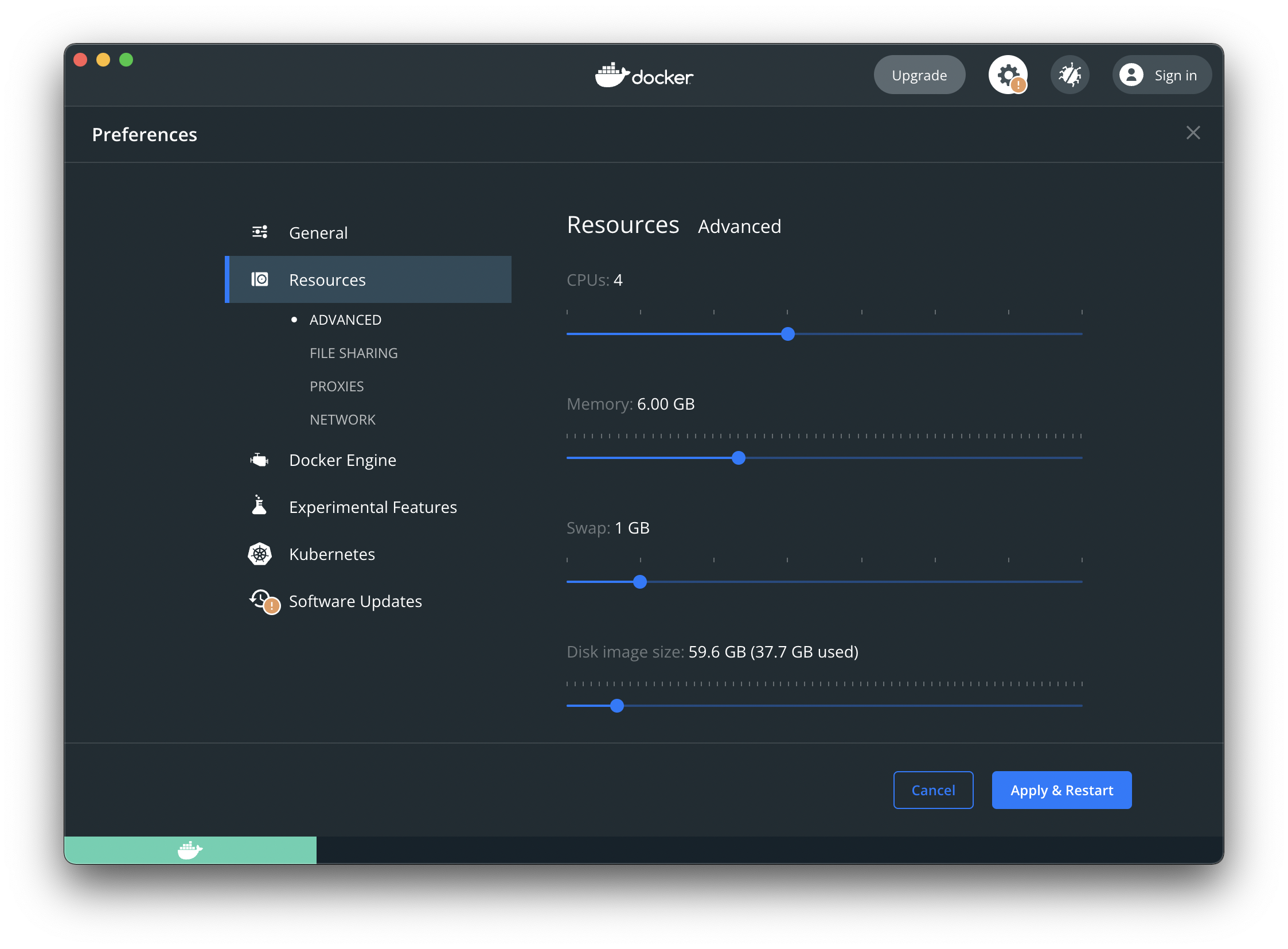

Deploy OpenServerless

To start using OpenServerless you can refer to the Installation
Guide. You can follow the local installation to quickly get started
with OpenServerless deployed on your machine or, if you want to follow
the tutorial on a cloud deployment, you can pick one of the many
supported cloud providers. Once installed, come back here!

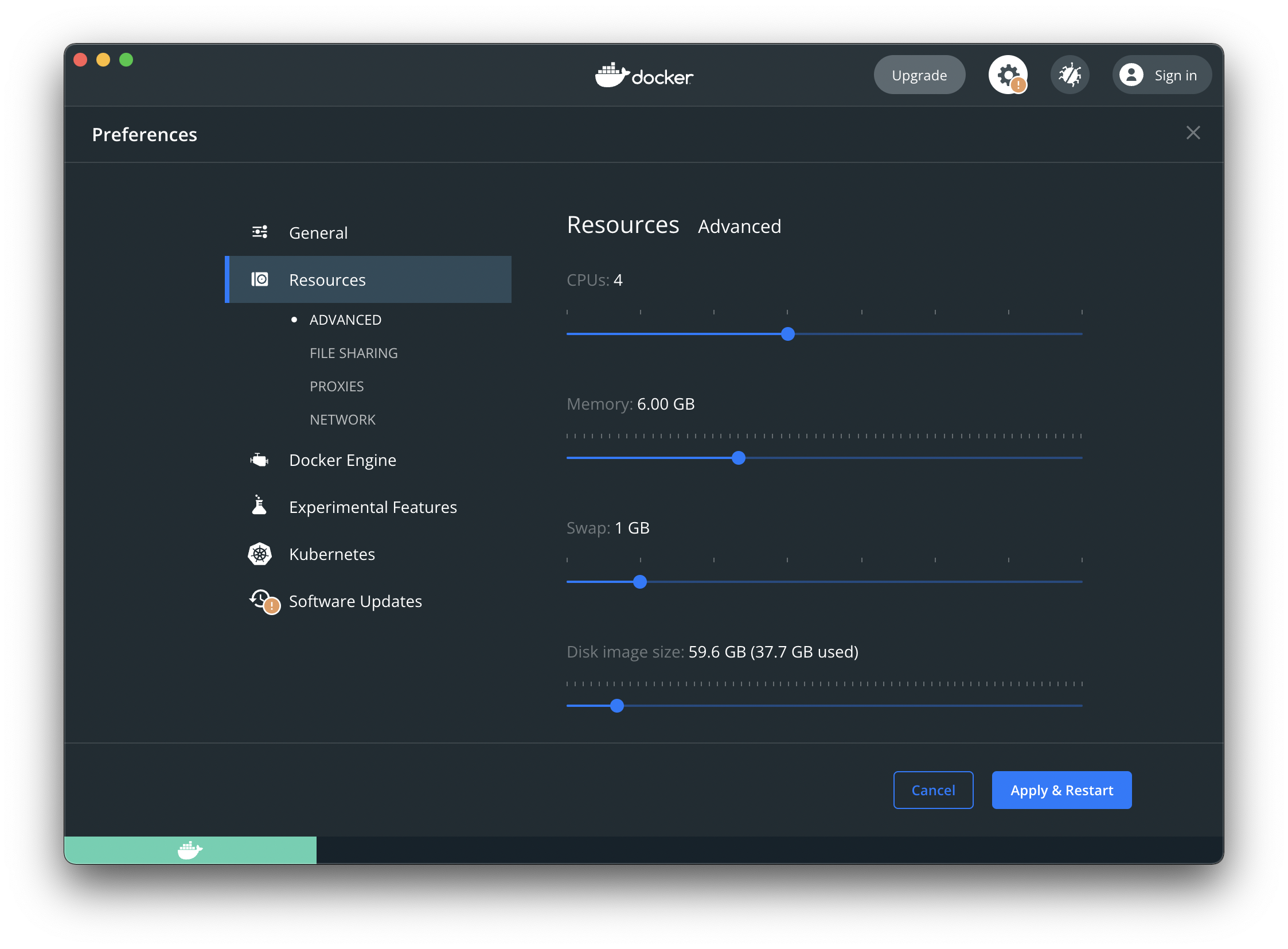

Enabling Services

After installing OpenServerless on a local machine with Docker or on a

supported cloud, you can enable or disable the services offered by the platform.

As we will use the Postgres database, static content with the Minio
S3-compatible storage, and a cron scheduler, let’s run in the terminal:

ops config enable --postgres --static --minio --cron

Since you should already have a deployment running, we have to update it

with the new services so they get deployed. Simply run:

And with just that (when it finishes), we have everything we need ready

to use!

Cleaning Up

Once you are done and want to clean the services configuration, just

run:

ops config disable --postgres --static --minio --cron

1.2 - First steps

Take your first steps with Apache OpenServerless


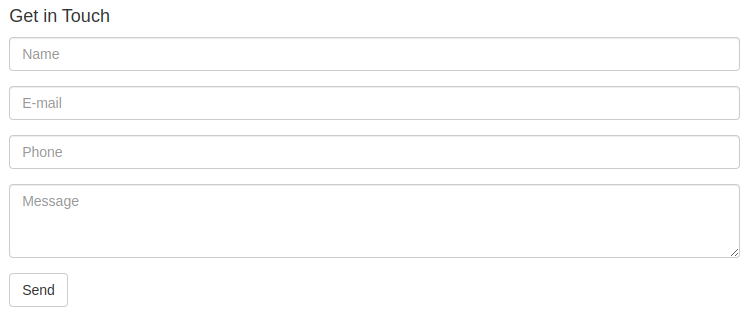

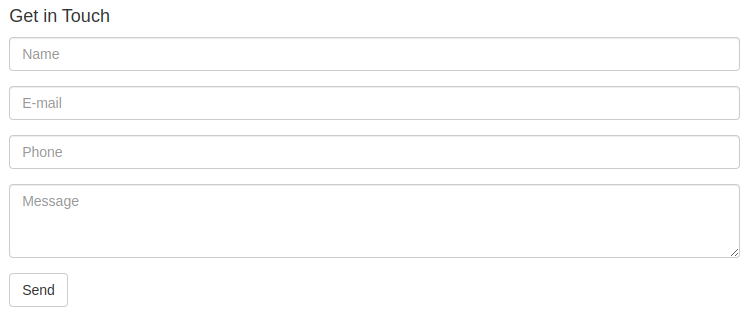

Starting at the Front

Right now, after a fresh installation, if you visit <apihost> you
will see a very simple page with:

Welcome to OpenServerless static content distributor landing page!!!

That’s because we’ve activated the static content, and by default it

starts with this simple index.html page. We will instead have our own

index page that shows the users a contact form powered by OpenServerless

actions. Let’s write it now.

Let’s create a folder that will contain all of our app code:

contact_us_app.

Inside it, create a new folder called web, which will store our static
frontend, and add an index.html file there with the following:

<!DOCTYPE html>

<html>

<head>

<link href="//maxcdn.bootstrapcdn.com/bootstrap/3.3.0/css/bootstrap.min.css" rel="stylesheet" id="bootstrap-css">

</head>

<body>

<div id="container">

<div class="row">

<div class="col-md-8 col-md-offset-2">

<h4>Get in Touch</h4>

<form method="POST">

<div class="form-group">

<input type="text" name="name" class="form-control" placeholder="Name">

</div>

<div class="form-group">

<input type="email" name="email" class="form-control" placeholder="E-mail">

</div>

<div class="form-group">

<input type="tel" name="phone" class="form-control" placeholder="Phone">

</div>

<div class="form-group">

<textarea name="message" rows="3" class="form-control" placeholder="Message"></textarea>

</div>

<button class="btn btn-default" type="submit" name="button">

Send

</button>

</form>

</div>

</div>

</div>

</body>

</html>

Now we just have to upload it to our OpenServerless deployment. You

could upload it using something like curl with a PUT to where your

platform is deployed at, but there is a handy command that does it

automatically for all files in a folder:

Pass ops web upload the path to the folder where index.html is stored
(the web folder) and visit <apihost> again.

Now you should see the new index page:

The contact form we just uploaded does not do anything. To make it work

let’s start by creating a new package to hold our actions. Moreover, we

can bind to this package the database url, so the actions can directly

access it!

With the debug command you can see what’s going on in your deployment.

This time let’s use it to grab the “postgres_url” value:

ops -config -d | grep POSTGRES_URL

Copy the Postgres URL (something like postgresql://...). Now we can

create a new package for the application:

ops package create contact -p dbUri <postgres_url>

ok: created package contact

The actions under this package will be able to access the “dbUri”

variable from their args!
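As a minimal sketch of how an action might read the bound parameter, assuming it arrives as args.dbUri exactly as configured above. The action below is illustrative only and is not part of the tutorial's app:

```javascript
// Illustrative action: reports whether the package parameter dbUri reached the action.
// It deliberately does not echo the connection string, which contains credentials.
function main(args) {
  const configured = typeof args.dbUri === "string" && args.dbUri.length > 0;
  return { body: configured ? "dbUri is set" : "dbUri is missing" };
}
```

Deployed inside the contact package, an action like this should report that dbUri is set without any parameter being passed at invocation time.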

To follow the same structure for our action files, let’s create a folder

packages and inside another folder contact to give our actions a

nice, easy to find, home.

To manage and check out your packages, you can use the ops package

subcommands.

ops package list

packages

/openserverless/contact private

/openserverless/hello private <-- a default package created during deployment

And to get specific information on a package:

ops package get contact

ok: got package contact

{

"namespace": "openserverless",

"name": "contact",

"version": "0.0.1",

"publish": false,

"parameters": [

{

"key": "dbUri",

"value": <postgres_url>

}

],

"binding": {},

"updated": 1696232618540

}

1.3 - Form validation

Learn how to add form validation from front to back-end

Now that we have a contact form and a package for our actions, we have

to handle the submission. We can do that by adding a new action that

will be called when the form is submitted. Let’s create a submit.js

file in our packages/contact folder.

function main(args) {

let message = []

let errors = []

// TODO: Form Validation

// TODO: Returning the Result

}

This action is a bit more complex. It takes the input object (called

args) which will contain the form data (accessible via args.name,

args.email, etc.). With that, we will do some validation and then

return the result.

Validation

Let’s start filling out the “Form Validation” part by checking the name:

// validate the name

if(args.name) {

message.push("name: "+args.name)

} else {

errors.push("No name provided")

}

Then the email by using a regular expression:

// validate the email

var re = /\S+@\S+\.\S+/;

if(args.email && re.test(args.email)) {

message.push("email: "+args.email)

} else {

errors.push("Email missing or incorrect.")

}

The phone, by checking that it’s at least 10 digits:

// validate the phone

if(args.phone && (args.phone.match(/\d/g) || []).length >= 10) {

message.push("phone: "+args.phone)

} else {

errors.push("Phone number missing or incorrect.")

}

Finally, the message text, if present:

// validate the message

if(args.message) {

message.push("message:" +args.message)

}
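The checks above can be tried locally with plain Node.js before deploying. The sketch below gathers them into a hypothetical helper, validate (a name the tutorial does not use), and guards the phone check so that a value with no digits is reported as an error:

```javascript
// Hypothetical helper gathering the tutorial's checks so they can be run locally.
function validate(args) {
  const message = [];
  const errors = [];

  if (args.name) {
    message.push("name: " + args.name);
  } else {
    errors.push("No name provided");
  }

  const re = /\S+@\S+\.\S+/;
  if (args.email && re.test(args.email)) {
    message.push("email: " + args.email);
  } else {
    errors.push("Email missing or incorrect.");
  }

  // match() returns null when there are no digits, so fall back to an empty array
  const digits = String(args.phone || "").match(/\d/g) || [];
  if (digits.length >= 10) {
    message.push("phone: " + args.phone);
  } else {
    errors.push("Phone number missing or incorrect.");
  }

  if (args.message) {
    message.push("message: " + args.message);
  }
  return { message, errors };
}

// Example: one complete submission and one empty submission
console.log(validate({ name: "Ada", email: "ada@example.com", phone: "555 123 3210" }));
console.log(validate({}));
```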

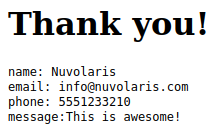

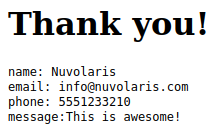

Submission

With the validation phase, we added to the “errors” array all the errors

we found, and to the “message” array all the data we want to show to the

user. So if there are errors, we have to show them, otherwise, we store

the message and return a “thank you” page.

// return the result

if(errors.length) {

var errs = "<ul><li>"+errors.join("</li><li>")+"</li></ul>"

return {

body: "<h1>Errors!</h1>"+

errs + '<br><a href="javascript:window.history.back()">Back</a>'

}

} else {

var data = "<pre>"+message.join("\n")+"</pre>"

return {

body: "<h1>Thank you!</h1>"+ data,

name: args.name,

email: args.email,

phone: args.phone,

message: args.message

}

}

Note how this action is returning HTML code. Actions can return a

{ body: <html> } kind of response and have their own url so they can

be invoked via a browser and display some content.

The HTML code to display is always returned in the body field, but we

can also return other stuff. In this case we added a field for each of

the form fields. This gives us the possibility to invoke in a sequence

another action that can act just on those fields to store the data in

the database.

Let’s start deploying the action:

ops action create contact/submit submit.js --web true

ok: created action contact/submit

The --web true specifies it is a web action. We are creating a

submit action in the contact package, that’s why we are passing

contact/submit.

You can retrieve the url with:

ops url contact/submit

$ <apihost>/api/v1/web/openserverless/contact/submit

If you click on it you will see the Error page with a list of errors,

that’s because we just invoked the submit logic for the contact form

directly, without passing in any args. This is meant to be used via the

contact form page!

We need to wire it into the index.html. So let’s open it again and add a

couple of attributes to the form:

--- <form method="POST"> <-- old

+++ <form method="POST" action="/api/v1/web/openserverless/contact/submit"

enctype="application/x-www-form-urlencoded"> <-- new

Upload the web folder again with the new changes:

ops web upload web/

Now if you go to the contact form page the send button should work. It

will invoke the submit action which in turn will return some html.

If you fill it correctly, you should see the “Thank you” page.

Note how only the HTML from the body field is displayed, the other

fields are ignored in this case.
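The same submission can be reproduced from a script, which helps when testing the action without a browser. The sketch below assumes Node.js 18 or later (for the global fetch and URLSearchParams) and a placeholder API host; buildSubmitRequest is a hypothetical helper name:

```javascript
// Hypothetical helper: builds the URL and fetch options for posting the form fields
// as application/x-www-form-urlencoded, like the HTML form does.
function buildSubmitRequest(apihost, fields) {
  return {
    url: apihost + "/api/v1/web/openserverless/contact/submit",
    options: {
      method: "POST",
      headers: { "Content-Type": "application/x-www-form-urlencoded" },
      body: new URLSearchParams(fields).toString(),
    },
  };
}

// Usage (not executed here): replace the host with your own <apihost>.
// const { url, options } = buildSubmitRequest("https://example.invalid", { name: "Ada" });
// fetch(url, options).then((r) => r.text()).then(console.log);
```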

The ops action command can be used for many more things besides

creating actions. For example, you can use it to list all available

actions:

ops action list

actions

/openserverless/contact/submit private nodejs:18

And you can also get info on a specific action:

ops action get contact/submit

{

"namespace": "openserverless/contact",

"name": "submit",

"version": "0.0.1",

"exec": {

"kind": "nodejs:18",

"binary": false

},

...

}

These commands can come in handy when you need to debug your actions.

Here is the complete submit.js action:

function main(args) {

let message = []

let errors = []

// validate the name

if (args.name) {

message.push("name: " + args.name)

} else {

errors.push("No name provided")

}

// validate the email

var re = /\S+@\S+\.\S+/;

if (args.email && re.test(args.email)) {

message.push("email: " + args.email)

} else {

errors.push("Email missing or incorrect.")

}

// validate the phone

if (args.phone && (args.phone.match(/\d/g) || []).length >= 10) {

message.push("phone: " + args.phone)

} else {

errors.push("Phone number missing or incorrect.")

}

// validate the message

if (args.message) {

message.push("message:" + args.message)

}

// return the result

if (errors.length) {

var errs = "<ul><li>" + errors.join("</li><li>") + "</li></ul>"

return {

body: "<h1>Errors!</h1>" +

errs + '<br><a href="javascript:window.history.back()">Back</a>'

}

} else {

var data = "<pre>" + message.join("\n") + "</pre>"

return {

body: "<h1>Thank you!</h1>" + data,

name: args.name,

email: args.email,

phone: args.phone,

message: args.message

}

}

}

1.4 - Use database

Store data into a relational database


Storing the Message in the Database

We are ready to use the database that we enabled at the beginning of the

tutorial.

Since we are using a relational database, we need to create a table to

store the contact data. We can do that by creating a new action called

create-table.js in the packages/contact folder:

const { Client } = require('pg')

async function main(args) {

const client = new Client({ connectionString: args.dbUri });

const createTable = `

CREATE TABLE IF NOT EXISTS contacts (

id serial PRIMARY KEY,

name varchar(50),

email varchar(50),

phone varchar(50),

message varchar(300)

);

`

// Connect to database server

await client.connect();

console.log('Connected to database');

try {

await client.query(createTable);

console.log('Contact table created');

} catch (e) {

console.log(e);

throw e;

} finally {

client.end();

}

}

We just need to run this once, therefore it doesn’t need to be a web

action. Here we can take advantage of the cron service we enabled!

There are also a couple of console logs that we can check out.

With the cron scheduler you can annotate an action with 2 kinds of

labels. One to make OpenServerless periodically invoke the action, the

other to automatically execute an action once, on creation.

Let’s create the action with the latter, which means annotating the

action with autoexec true:

ops action create contact/create-table create-table.js -a autoexec true

ok: created action contact/create-table

With -a you can add “annotations” to an action. OpenServerless will

invoke this action as soon as possible, so we can go on.

In OpenServerless an action invocation is called an activation. You
can keep track of activations, retrieve information and check logs from
an action with ops activation. For example, with ops activation list
you can retrieve the list of invocations. For caching reasons the first

time you run the command the list might be empty. Just run it again and

you will see the latest invocations (probably some hello actions from

the deployment).

If we want to make sure create-table was invoked, we can do it with

this command. The cron scheduler can take up to 1 minute to run an

autoexec action, so let’s wait a bit and run ops activation list

again.

ops activation list

Datetime Activation ID Kind Start Duration Status Entity

2023-10-02 09:52:01 1f02d3ef5c32493682d3ef5c32b936da nodejs:18 cold 312ms success openserverless/create-table:0.0.1

..

Or we could run ops activation poll to listen for new logs.

ops activation poll

Enter Ctrl-c to exit.

Polling for activation logs

When the logs from the create-table action appear, we can stop the

command with Ctrl-c.

Each activation has an Activation ID which can be used with other

ops activation subcommands or with the ops logs command.

We can also check out the logs with either ops logs <activation-id> or

ops logs --last to quickly grab the last activation’s logs:

ops logs --last

2023-10-15T14:41:01.230674546Z stdout: Connected to database

2023-10-15T14:41:01.238457338Z stdout: Contact table created

The Action to Store the Data

We could just write the code to insert data into the table in the

submit.js action, but it’s better to have a separate action for that.

Let’s create a new file called write.js in the packages/contact

folder:

const { Client } = require('pg')

async function main(args) {

const client = new Client({ connectionString: args.dbUri });

// Connect to database server

await client.connect();

const { name, email, phone, message } = args;

try {

let res = await client.query(

'INSERT INTO contacts(name,email,phone,message) VALUES($1,$2,$3,$4)',

[name, email, phone, message]

);

console.log(res);

} catch (e) {

console.log(e);

throw e;

} finally {

client.end();

}

return {

body: args.body,

name,

email,

phone,

message

};

}

Very similar to the create table action, but this time we are inserting

data into the table by passing the values as parameters. There is also a

console.log on the response in case we want to check some logs again.

Let’s deploy it:

ops action create contact/write write.js

ok: created action contact/write
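Reading the data back follows the same parameterized pattern. As a sketch, the hypothetical helper below (listContacts is not part of the tutorial) runs a SELECT through any object exposing the query(text, values) method of a pg Client. Inside an action you would pass a connected Client created from args.dbUri, as in write.js:

```javascript
// Hypothetical helper: returns the most recent rows from the contacts table.
// `client` is assumed to be a connected pg Client (or any object with a compatible query method).
async function listContacts(client, limit = 10) {
  const res = await client.query(
    "SELECT id, name, email, phone, message FROM contacts ORDER BY id DESC LIMIT $1",
    [limit]
  );
  return res.rows;
}
```

Taking the client as an argument keeps the helper easy to test with a stub, without a running database.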

Finalizing the Submit

Alright, we are almost done. We just need to create a pipeline of

submit → write actions. The submit action returns the 4 form

fields together with the HTML body. The write action expects those 4

fields to store them. Let’s put them together into a sequence:

ops action create contact/submit-write --sequence contact/submit,contact/write --web true

ok: created action contact/submit-write

With this command we created a new action called submit-write that is

a sequence of submit and write. This means that OpenServerless will

call in a sequence submit first, then get its output and use it as

input to call write.
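To make the data flow concrete, a sequence can be imitated locally by feeding each action's result to the next one. This is only a sketch of the behavior described above, not how OpenServerless implements sequences; runSequence and the two stand-in actions are hypothetical:

```javascript
// Hypothetical local imitation of a sequence: each action receives the previous result as args.
async function runSequence(actions, args) {
  let result = args;
  for (const action of actions) {
    result = await action(result); // works for both sync and async actions
  }
  return result;
}

// Stand-ins for submit and write, reduced to the fields they exchange
const submit = (args) => ({ body: "<h1>Thank you!</h1>", name: args.name, email: args.email });
const write = async (args) => ({ ...args, stored: true }); // a real write would insert a row

runSequence([submit, write], { name: "Ada", email: "ada@example.com" }).then(console.log);
```

The sketch leaves out package parameters such as dbUri, which the deployed write action also finds in its args.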

Now the pipeline is complete, and we can test it by submitting the form

again. This time the data will be stored in the database.

Note that write passes on the HTML body so we can still see the thank

you message. If we want to hide it, we can just remove the body

property from the return value of write. We are still returning the

other 4 fields, so another action can use them (spoiler: it will happen

next chapter).

Let’s check out again the action list:

ops action list

actions

/openserverless/contact/submit-write private sequence

/openserverless/contact/write private nodejs:18

/openserverless/contact/create-table private nodejs:18

/openserverless/contact/submit private nodejs:18

You probably have something similar. Note the submit-write is managed as

an action, but it’s actually a sequence of 2 actions. This is a very

powerful feature of OpenServerless, as it allows you to create complex

pipelines of actions that can be managed as a single unit.

Trying the Sequence

As before, we have to update our index.html to use the new action.

First let’s get the URL of the submit-write action:

ops url contact/submit-write

<apihost>/api/v1/web/openserverless/contact/submit-write

Then we can update the index.html file:

--- <form method="POST" action="/api/v1/web/openserverless/contact/submit"

enctype="application/x-www-form-urlencoded"> <-- old

+++ <form method="POST" action="/api/v1/web/openserverless/contact/submit-write"

enctype="application/x-www-form-urlencoded"> <-- new

We just need to add -write to the action name.

Try again to fill the contact form (with correct data) and submit it.

This time the data will be stored in the database.

If you want to retrieve information from your database, ops provides several

utilities under the ops devel command. They are useful to interact

with the integrated services, such as the database we are using.

For instance, let’s run:

ops devel psql sql "SELECT * FROM CONTACTS"

[{'id': 1, 'name': 'OpenServerless', 'email': 'info@nuvolaris.io', 'phone': '5551233210', 'message': 'This is awesome!'}]

1.5 - Sending notifications

Sending notifications on user interaction


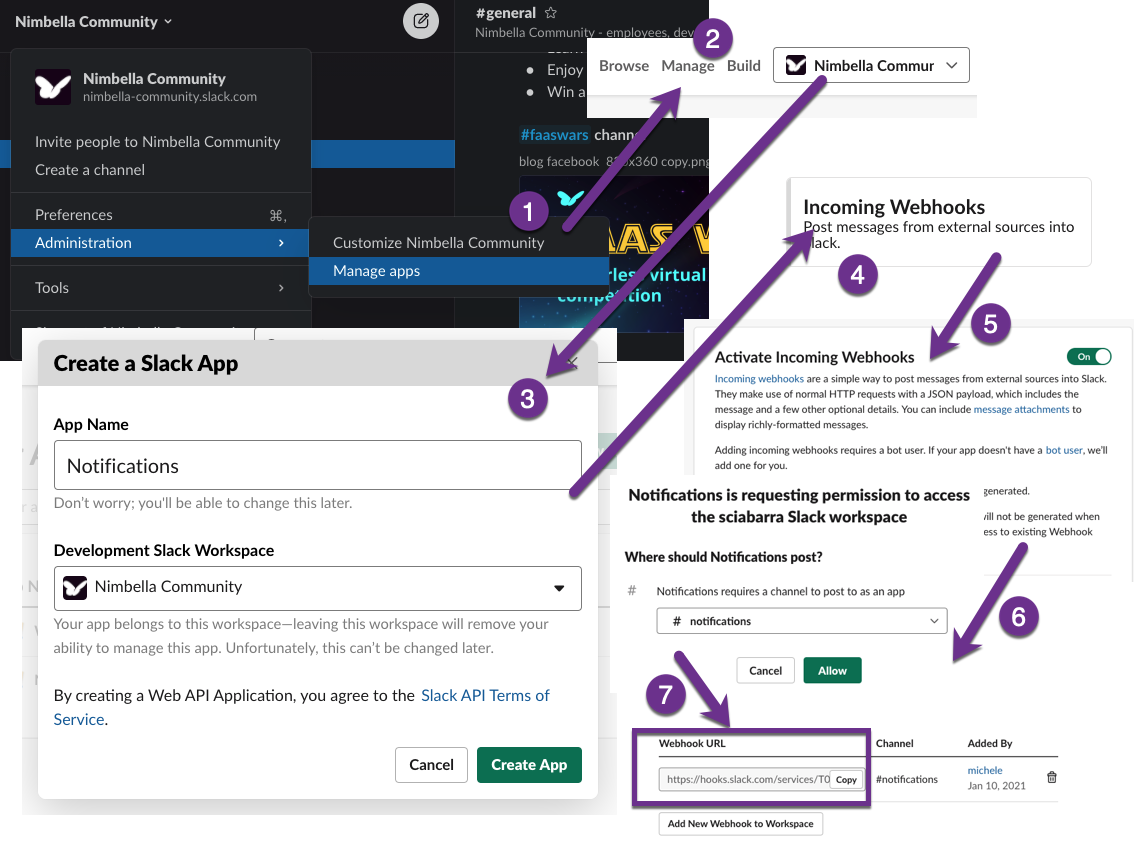

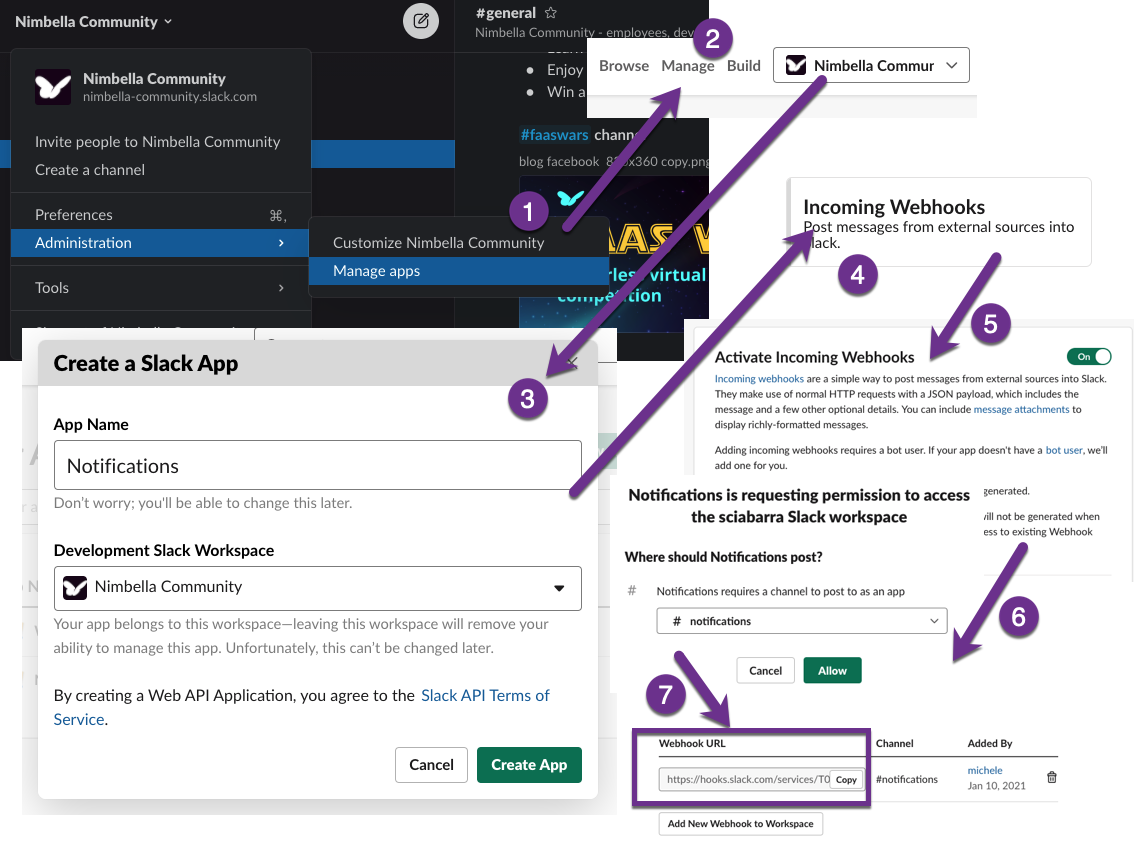

It would be great to receive a notification when a user tries to
contact us. For this tutorial we will pick Slack to receive a message
when it happens.

We need to:

have a Slack workspace where we can send messages;

create a Slack app that will be added to the workspace;

activate a webhook for the app that we can trigger from an action.

Check out the following scheme for the steps:

Once we have a webhook we can use to send messages we can proceed to

create a new action called notify.js (in the packages/contact

folder):

// notify.js

function main(args) {

const { name, email, phone, message } = args;

let text = `New contact request from ${name} (${email}, ${phone}):\n${message}`;

console.log("Built message", text);

return fetch(args.notifications, {

method: 'POST',

headers: {

'Content-Type': 'application/json',

},

body: JSON.stringify({ text }),

})

.then(response => {

if (!response.ok) {

console.log("Error sending message. Status code:", response.status);

} else {

console.log("Message sent successfully");

}

return {

body: args.body,

};

})

.catch(error => {

console.log("Error sending message", error);

return {

body: error,

};

});

}

This action has the args.notifications parameter, which is the
webhook. It also receives the usual 4 form fields in input, which it
uses to build the text of the message. The action returns the body it
received in input (args.body), so the HTML produced by a previous
action is passed through, or the error if the request fails.

We’ve also put some logs that we can use for debugging purposes.

Let’s first set up the action:

ops action create contact/notify notify.js -p notifications <your webhook>

ok: created action contact/notify

We are already setting the notifications parameter on action creation,
which is the webhook. The other parameters are the form fields that the
submit action will give in input at every invocation.
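Before wiring in a real webhook, the message text can be checked locally. The sketch below isolates the payload construction from notify.js into a hypothetical helper, buildPayload, and falls back to a placeholder when a field is missing. The fallback is an addition for illustration, not part of the tutorial's action:

```javascript
// Hypothetical helper: builds the JSON body that notify.js posts to the webhook.
function buildPayload(args) {
  const field = (value) => (value ? String(value) : "n/a"); // placeholder for missing fields
  const text =
    `New contact request from ${field(args.name)} ` +
    `(${field(args.email)}, ${field(args.phone)}):\n${field(args.message)}`;
  return JSON.stringify({ text });
}

console.log(buildPayload({ name: "Ada", email: "ada@example.com", phone: "5551233210", message: "Hi" }));
```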

Creating Another Action Sequence

We have developed an action that can send a Slack message as a

standalone action, but we designed it to take the output of the submit

action and return it as is. Time to extend the previous sequence!

Note that it will send messages for every submission, even for incorrect

inputs, so we will know if someone is trying to use the form without

providing all the information. But we will only store the fully

validated data in the database.

Let’s create the sequence, and then test it:

ops action create contact/submit-notify --sequence contact/submit-write,contact/notify --web true

ok: created action contact/submit-notify

We just created a new sequence submit-notify from the previous

sequence submit-write and the new notify.

If you want to get more info about this sequence, you can use the

ops action get command:

ops action get contact/submit-notify

{

"namespace": "openserverless/contact",

"name": "submit-notify",

"version": "0.0.1",

"exec": {

"kind": "sequence",

"components": [

"/openserverless/contact/submit-write",

"/openserverless/contact/notify"

]

},

...

}

See how the exec key has a kind of sequence and a list of

components that are the actions that compose the sequence.

Now to start using this sequence instead of using the submit action, we

need to update the web/index.html page to invoke the new sequence.

As before let’s grab the url:

ops url contact/submit-notify

<apihost>/api/v1/web/openserverless/contact/submit-notify

And update the index.html:

--- <form method="POST" action="/api/v1/web/openserverless/contact/submit-write"

enctype="application/x-www-form-urlencoded"> <-- old

+++ <form method="POST" action="/api/v1/web/openserverless/contact/submit-notify"

enctype="application/x-www-form-urlencoded"> <-- new

Don’t forget to re-upload the web folder with ops web upload web/.

Now try to fill out the form again and press send! It will execute the

sequence and you will receive the message from your Slack App.

The tutorial introduced you to some utilities to retrieve information

and to the concept of activation. Let’s use some more commands to

check out the logs and see if the message was really sent.

The easiest way to check for all the activations that happen in this app

with all their logs is:

ops activation poll

Enter Ctrl-c to exit.

Polling for activation logs

This command polls continuously for log messages. If you go ahead and

submit a message in the app, all the actions will show up here together

with their log messages.

To also check if there are some problems with your actions, run a couple

of times ops activation list and check the Status of the

activations. If you see some developer error or any other errors, just

grab the activation ID and run ops logs <activation ID>.

1.6 - App Deployment

Learn how to deploy your app on Apache OpenServerless


Packaging the App

With OpenServerless you can write a manifest file (in YAML) to have an

easy way to deploy applications.

In this last chapter of the tutorial we will package the code to easily

deploy the app, both frontend and actions.

Start The Manifest File

Let’s create a “manifest.yaml” file in the packages directory which

will be used to describe the actions to deploy:

packages:

contact:

actions:

notify:

function: contact/notify.js

web: true

inputs:

notifications:

value: $NOTIFICATIONS

This is the basic manifest file with just the notify action. At the

top level we have the standard packages keyword, under which we can

define the packages we want. Until now we created all of our actions in

the contact package so we add it under packages.

Then under each package, the actions keyword is needed so we can add

our action custom names with the path to the code (with function).

We also add web: true, which is equivalent to --web true when
creating the action manually.

Finally, we use the inputs keyword to define the parameters to inject
in the function.

If we apply this manifest file (we will see how soon), it will be the

same as the previous

ops action create contact/notify <path-to-notify.js> -p notifications $NOTIFICATIONS --web true.

You need to have the webhook URL in the NOTIFICATIONS environment

variable.

The Submit Action

The submit action is quite straightforward:

packages:

contact:

actions:

...

submit:

function: contact/submit.js

web: true

The Database Actions

Similarly to the notify and submit actions, let’s add to the

manifest file the two actions for the database. We also need to pass as

a package parameter the DB URL, so we will use the inputs key as before,

but at the package level:

packages:

contact:

inputs:

dbUri:

type: string

value: $POSTGRES_URL

actions:

...

write:

function: contact/write.js

web: true

create-table:

function: contact/create-table.js

annotations:

autoexec: true

Note the create-table action does not have web set to true, as it
does not need to be exposed to the world. Instead it just has the
annotation for the cron scheduler.

The Sequences

Lastly, we created the submit-write and submit-notify sequences, which
we have to specify in the manifest file as well.

packages:

contact:

inputs:

...

actions:

...

sequences:

submit-write:

actions: submit, write

web: true

submit-notify:

actions: submit-write, notify

web: true

We just have to add the sequences key at the contact level (next to

actions) and define the sequences we want with the available actions.

Deployment

The final version of the manifest file is:

packages:

contact:

inputs:

dbUri:

type: string

value: $POSTGRES_URL

actions:

notify:

function: contact/notify.js

web: true

inputs:

notifications:

value: $NOTIFICATIONS

submit:

function: contact/submit.js

web: true

write:

function: contact/write.js

web: true

create-table:

function: contact/create-table.js

annotations:

autoexec: true

sequences:

submit-write:

actions: submit, write

web: true

submit-notify:

actions: submit-write, notify

web: true

ops comes equipped with a handy command to deploy an app:

ops project deploy.

It checks if there is a packages folder containing a manifest file
and deploys all the specified actions. Then it checks if there is a
web folder and uploads it to the platform.

It does everything we did manually until now in one command.

So, from the top level directory of our app, let’s set the input
environment variables and run:

export POSTGRES_URL=<your-postgres-url>

export NOTIFICATIONS=<the-webhook>

ops project deploy

Packages and web directory present.

Success: Deployment completed successfully.

Found web directory. Uploading..

With just this command you deployed all the actions (and sequences) and

uploaded the frontend (from the web folder).
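Because the manifest reads $POSTGRES_URL and $NOTIFICATIONS from the environment, it can help to verify them before deploying. A small Node.js sketch, with missingEnv as a hypothetical helper name:

```javascript
// Hypothetical pre-deploy check: lists the required variables that are unset or empty.
function missingEnv(names, env = process.env) {
  return names.filter((name) => !env[name]);
}

const missing = missingEnv(["POSTGRES_URL", "NOTIFICATIONS"]);
if (missing.length > 0) {
  console.error("Set these variables before running ops project deploy: " + missing.join(", "));
} else {
  console.log("Environment looks complete.");
}
```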

2 - CLI

A handy command line to interact with all parts of OpenServerless

OpenServerless CLI

The ops command is the command line interface to OpenServerless.

It lets you install and manipulate the components of the system.

If it is not already included in the development environment provided,
you can download the CLI suitable for your platform from here and
install it.

Log in to the system

To start working, you have to log in to an OpenServerless
installation.

The administrator should have provided you with a username, a password
and the URL to access the system.

For example, let’s assume you are the user mirella and the system is

available on https://nuvolaris.dev.

In order to log in, type the following command and enter your password.

ops -login https://nuvolaris.dev mirella

Enter Password:

If the password is correct you are logged in to the system and you can use

the commands described below.

Next Steps

Once logged in, you can:

2.1 - Entities

The parts that OpenServerless applications are made of


OpenServerless applications are composed of some “entities” that you can
manipulate either using a command line interface or programmatically
with code.

The command line interface is the ops command line tool, which can be
used directly on the command line or automated through scripts. You can
also use a REST API crafted explicitly for OpenServerless.

The entities available in OpenServerless are:

Packages: They serve as a means of

grouping actions together, facilitating the sharing of parameters,

annotations, etc. Additionally, they offer a base URL that can be

utilized by web applications.

Actions: These are the fundamental

components of an OpenServerless application, capable of being written

in any programming language. Actions accept input and produce

output, both formatted in JSON.

Activations: Each action invocation
produces an activation ID that can be listed. Action output and
results are logged and associated with activations, and can be
retrieved by providing an activation ID.

Sequences: Actions can be

interconnected, where the output of one action serves as the input

for another, effectively forming a sequence.

Triggers: Serving as entry points with

distinct names, triggers are instrumental in activating multiple

actions.

Rules: Rules establish an association

between a trigger and an action. Consequently, when a trigger is

fired, all associated actions are invoked accordingly.
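As a minimal illustration of the action entity in JavaScript, following the main(args) convention used by the tutorial actions. The greeting field and the default value are made up for the example:

```javascript
// A minimal action: receives a JSON object as args and returns a JSON object.
function main(args) {
  const name = args.name || "world";
  return { greeting: "Hello, " + name };
}

console.log(main({ name: "Mirella" }));
```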

The ops command

Let’s now provide an overview of OpenServerless’ command line interface,

focusing on the ops command.

The command can be downloaded in precompiled binary format for many
platforms by following the Download button on https://www.nuvolaris.io/

The ops command is composed of many commands, each one with many

subcommands. The general format is:

ops <entity> <command> <parameters> <flags>

Note that <parameters> and <flags> are different for each

<command>, and for each <entity> there are many subcommands.

The CLI shows documentation in the form of help output if you do not

provide enough parameters to it. Start with ops to get the list of the

main commands. If you type ops <entity>, you get the help for that

entity, and so on.

For example, let’s see the output of ops (showing the main commands) and

of the most frequently used command, action, which also shows the most

common subcommands, shared with many others:

$ ops

Welcome to Ops, the all-mighty OpenServerless Build Tool

The top level commands all have subcommands.

Just type ops <command> to see its subcommands.

Commands:

action work with actions

activation work with activations

invoke shorthand for action invoke (-r is the default)

logs shorthand for activation logs

package work with packages

result shorthand for activation result

rule work with rules

trigger work with triggers

url get the url of a web action

$ wsk action

There are many more subcommands used for administrative purposes. In

this documentation we only focus on the subcommands used to manage the

main entities of OpenServerless.

Keep in mind that commands represent entities, and their subcommands

follow the CRUD model (Create, Retrieve via get/list, Update, Delete).

This serves as a helpful mnemonic to understand the ops command’s

functionality. While there are exceptions, these will be addressed

throughout the chapter’s discussion. Note, however, that some subcommands

have their own specific flags.

Naming Entities

Let’s see how entities are named.

Each user has a namespace, and everything a user creates

belongs to it.

The namespace is usually created by a system administrator.

Under a namespace you can create triggers, rules, actions and packages.

Those entities will have a name like this:

/mirella/demo-trigger

/mirella/demo-rule

/mirella/demo-package

/mirella/demo-action

When you create a package, you can put actions and feeds under it. Those

entities are named with the package as a prefix, for example

/mirella/demo-package/demo-action.

💡 NOTE

In the commands you are not required to specify a namespace. If your user

is mirella, your namespace is /mirella, and you type demo-package

to mean /mirella/demo-package, and demo-package/demo-action to mean

/mirella/demo-package/demo-action.
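The expansion described in the note can be sketched in a few lines of JavaScript. This is only an illustration of the naming rule: qualifyName is a hypothetical helper, not a function of the ops CLI, and it assumes that a name starting with a slash is already fully qualified.

```javascript
// Hypothetical helper: expands a short entity name to its fully qualified form.
function qualifyName(name, namespace) {
  // Assumption: names that already start with "/" are fully qualified.
  if (name.startsWith("/")) return name;
  return "/" + namespace + "/" + name;
}

console.log(qualifyName("demo-package", "mirella"));
// prints /mirella/demo-package
console.log(qualifyName("demo-package/demo-action", "mirella"));
// prints /mirella/demo-package/demo-action
```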

2.1.1 - Packages

How to group actions and their related files

Packages

OpenServerless groups actions and feeds in packages under a

namespace. It is conceptually similar to a folder containing a group of

related files.

A package allows you to:

Group related actions together.

Share parameters and annotations (each action sees the parameters

assigned to the package).

Provide web actions with a common prefix in the URL to invoke them.

For example, we can create a package demo-package and assign a

parameter:

$ ops package create demo-package -p email no-reply@nuvolaris.io

ok: created package demo-package

This command creates a new package with the specified name.
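To see what sharing a parameter means in practice, here is a minimal sketch of an action that could live in demo-package. The action itself is hypothetical (it is not part of this guide); the point is that the email value arrives in args even though the caller never passes it, because it is assigned to the package.

```javascript
// Hypothetical action, assumed to be deployed inside demo-package.
// The email parameter is not sent by the caller: it comes from the package.
function main(args) {
  const email = args.email || "unknown";
  return { body: "Replies will come from " + email };
}
```

Invoked without parameters inside a package created as above, such an action would see email set to no-reply@nuvolaris.io.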

Package Creation, Update, and Deletion

Let’s proceed with the commands to list, get information, update, and

finally delete a package:

First, let’s list our packages:

$ ops package list

packages

/openserverless/demo-package/ private

If you want to update a package by adding a parameter:

$ ops package update demo-package -p email info@nuvolaris.io

ok: updated package demo-package

Let’s retrieve some package information:

$ ops package get demo-package -s

package /openserverless/demo-package/sample:

(parameters: *email)

Note the final -s, which means “summarize.”

Finally, let’s delete a package:

$ ops package delete demo-package

ok: deleted package demo-package

Adding Actions to the Package

Actions can be added to a package using this command:

ops action create <package-name>/<action-name>

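This creates a new action inside the specified package. As the examples in

the Actions section show, the command also takes the source file of the

action as an argument.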

Using Packages

Once a package is created, actions within it can be invoked using their

full path, with this schema: <package-name>/<action-name>. This allows

organizing actions hierarchically and avoiding naming conflicts.

Conclusion

Packages in OpenServerless provide a flexible and organized way to

manage actions and their dependencies. Using the ops CLI, you can

create packages, add actions to them, and update or delete them,

simplifying the development and management of serverless applications.

2.1.2 - Actions

Functions, the core of OpenServerless

Actions

An action can generally be considered as a function, a snippet of code,

or a method.

The ops action command is designed for managing actions, featuring

frequently utilized CRUD operations such as list, create, update, and

delete. We will illustrate these operations through examples using a

basic hello action. Let’s assume we have a file hello.js in the

current directory, with the following content:

function main(args) {

return { body: "Hello" }

}

Simple Action Deployment

If we want to deploy this simple action in the package demo, let’s

execute:

$ ops package update demo

ok: updated package demo

$ ops action update demo/hello hello.js

ok: updated action demo/hello

Note that we ensured the package exists before creating the action.

We can actually omit the package name. In this case, the package name is

default, which always exists in a namespace. However, we advise always

placing actions in some named package.

💡 NOTE

We used update, but we could have used create, since the action did not

exist yet: update creates the action if it does not exist and

updates it if it is already there. Update here is similar to the patch

concept in REST API. However, create generates an error if the action

already exists, while update does not, so it is practical to always

use update instead of create (unless we really want an error for an

existing action for some reason).
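The difference between the two subcommands can be modelled with a small in-memory sketch. This is not how the platform is implemented: the Map stands in for the action registry, and the error message is made up for the example.

```javascript
// In-memory stand-in for the action registry (illustrative only).
const actions = new Map();

// create fails when the name is already taken.
function create(name, code) {
  if (actions.has(name)) throw new Error("action already exists: " + name);
  actions.set(name, code);
}

// update creates the action when missing and overwrites it otherwise.
function update(name, code) {
  actions.set(name, code);
}

update("demo/hello", "v1"); // creates the action
update("demo/hello", "v2"); // overwrites it, no error

let failed = false;
try {
  create("demo/hello", "v3"); // the name is taken, so this throws
} catch (e) {
  failed = true;
}
console.log(actions.get("demo/hello"), failed); // prints: v2 true
```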

How to Invoke Actions

Let’s try to run the action:

$ ops invoke demo/hello

{

"body": "Hello"

}

Strictly speaking, invoke is not a separate command: it is just a

handy shortcut for ops action invoke -r.

If you try to run ops action invoke demo/hello, you get:

$ ops action invoke demo/hello

ok: invoked /_/demo/hello with id fec047bc81ff40bc8047bc81ff10bc85

You may wonder where the result is. In reality, in OpenServerless, all

actions are by default asynchronous, so what you usually get is the

activation id to retrieve the result once the action is completed.

To block the execution until the action is completed and get the result,

you can either use the flag -r or --result, or use ops invoke.

Note, however, that we are using ops to invoke an action, which means

all the requests are authenticated. You cannot invoke actions directly

without logging into the system first.

However, you can mark an action to be public by creating it with

--web true (see below).

Public Actions

If you want an action to be public, you can do:

$ ops action update demo/hello hello.js --web true

ok: updated action demo/hello

$ ops url demo/hello

https://nuvolaris.dev/api/v1/web/mirella/demo/hello

and you can invoke it with:

$ curl -sL https://nuvolaris.dev/api/v1/web/mirella/demo/hello

Hello

Note that the output is only showing the value of the body field. This

is because the web actions must follow a pattern to produce an output

suitable for web output, so the output should be under the key body,

and so on. Check the section on Web Actions for more information.

💡 NOTE

Actually, ops url is a shortcut for ops action get --url. You can

use ops action get to retrieve a more detailed description of an

action in JSON format.

After action create, action update, and action get (and the

shortcuts invoke and url), we should mention action list and

action delete.

The action list command lists the actions, and action delete

removes them:

$ ops action list

/mirella/demo/hello private nodejs:18

$ ops action delete demo/hello

ok: deleted action demo/hello

Conclusion

Actions are a core part of our entities. An OpenServerless action is a

self-contained and executable unit of code deployed on the OpenServerless

serverless computing platform.

2.1.3 - Activations

Detailed records of action executions

Activations

When an event occurs that triggers a function, OpenServerless creates an

activation record, which contains information about the function execution,

such as input parameters, output results, and any metadata associated with

the activation. It is similar to the classic concept of a log entry.

How activations work

When invoking an action with ops action invoke, you’ll receive only an

activation id as an answer.

This activation id allows you to read results and outputs produced by

the execution of an action.

Let’s demonstrate how it works by modifying the hello.js file to add a

command to log some output.

function main(args) {

console.log("Hello")

return { "body": "Hello" }

}

Now, let’s deploy and invoke it (with a parameter hello=world) to get

the activation id:

$ ops action update demo/hello hello.js

ok: updated action demo/hello

$ ops action invoke demo/hello -p hello world

ok: invoked /_/demo/hello with id 0367e39ba7c74268a7e39ba7c7126846

Associated with every invocation, there is an activation id (in the

example, it is 0367e39ba7c74268a7e39ba7c7126846).

We use this id to retrieve the results of the invocation with

ops activation result or its shortcut, just ops result, and we can

retrieve the logs using ops activation logs or just ops logs.

$ ops result 0367e39ba7c74268a7e39ba7c7126846

{

"body": "Hello"

}

$ ops logs 0367e39ba7c74268a7e39ba7c7126846

2024-02-17T20:01:31.901124753Z stdout: Hello
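When debugging, it often helps to log the parameters an action received, so that they show up in the activation logs. The following is a small variant of hello.js written for this purpose. It is a sketch, not part of the original example.

```javascript
// Variant of hello.js that also logs the parameters it received.
function main(args) {
  // Anything written to the console ends up in the activation logs.
  console.log("params: " + JSON.stringify(args));
  return { body: "Hello" };
}
```

With this version, invoking the action with -p hello world should leave a line containing {"hello":"world"} in the logs of that activation.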

List of activations

You can list the activations with ops activation list and limit the

number with --limit if you are interested in a subset.

$ ops activation list --limit 5

Datetime Activation ID Kind Start Duration Status Entity

2024-02-17 20:01:31 0367e39ba7c74268a7e39ba7c7126846 nodejs:18 warm 8ms success dashboard/hello:0.0.1

2024-02-17 20:00:00 f4f82ee713444028b82ee71344b0287d nodejs:18 warm 5ms success dashboard/hello:0.0.1

2024-02-17 19:59:54 98d19fe130da4e93919fe130da7e93cb nodejs:18 cold 33ms success dashboard/hello:0.0.1

2024-02-17 17:40:53 f25e1f8bc24f4f269e1f8bc24f1f2681 python:3 warm 3ms success dashboard/index:0.0.2

2024-02-17 17:35:12 bed3213547cc4aed93213547cc8aed8e python:3 warm 2ms success dashboard/index:0.0.2

Note also the --since option, which is useful to show activations from

a given timestamp (you can obtain a timestamp with date +%s).

Since it can be quite annoying to keep track of the activation id, there

are two useful alternatives.

With ops result --last and ops logs --last, you can retrieve just

the last result or log.

Polling activations

With ops activation poll, the CLI starts a loop and displays all the

activations as they happen.

$ ops activation poll

Enter Ctrl-c to exit.

Polling for activation logs

Conclusion

Activations provide a way to monitor and track the execution of

functions, enabling understanding of how code behaves in response to

different events and allowing for debugging and optimizing serverless

applications.

2.1.4 - Sequences

Combine actions in sequences

Sequences

You can combine actions into sequences and invoke them as a single

action. Therefore, a sequence represents a logical junction between two

or more actions, where each action is invoked in a specific order.

Combine actions sequentially

Suppose we want to describe an algorithm for preparing a pizza. We could

prepare everything in a single action, creating it all in one go, from

preparing the dough to adding all the ingredients and cooking it.

What if you would like to edit only a specific part of your algorithm,

like adding fresh tomato instead of classic, or reducing the amount of

water in your pizza dough? Every time, you have to edit your main action

to modify only a part.

Again, what if before returning a pizza you’d like to invoke a new

action like “add basil,” or if you decide to refrigerate the pizza dough

after preparing it but before cooking it?

This is where sequences come into play.

Create a file called preparePizzaDough.js

function main(args) {

let persons = args.howManyPerson;

let flour = persons * 180; // grams

let water = persons * 120; // ml

let yeast = (flour + water) * 0.02;

let pizzaDough =

"Mix " +

flour +

" grams of flour with " +

water +

" ml of water and add " +

yeast +

" grams of brewer's yeast";

return {

pizzaDough: pizzaDough,

whichPizza: args.whichPizza,

};

}

Now, in a file cookPizza.js

function main(args) {

let pizzaDough = args.pizzaDough;

let whichPizza = args.whichPizza;

let baseIngredients = "tomato and mozzarella";

if (whichPizza === "Margherita") {

return {

result:

"Cook " +

pizzaDough +

" topped with " +

baseIngredients +

" for 3 minutes at 380°C",

};

} else if (whichPizza === "Sausage") {

baseIngredients += " plus sausage";

return {

result:

"Cook " +

pizzaDough +

" topped with " +

baseIngredients +

". Cook for 3 minutes at 380°C",

};

}

}

We have now split our code to prepare pizza into two different actions.

When we need to change one step, we can edit only that action and leave

the other untouched. We can also add new actions that are invoked

before or after cooking the pizza.

Let’s try it.

Testing the sequence

First, create our two actions

ops action create preparePizzaDough preparePizzaDough.js

ops action create cookPizza cookPizza.js

Now, we can create the sequence:

ops action create pizzaSequence --sequence preparePizzaDough,cookPizza

Finally, let’s invoke it

ops action invoke --result pizzaSequence -p howManyPerson 4 -p whichPizza "Margherita"

{

"result": "Cook Mix 720 grams of flour with 480 ml of water and add 24 grams of brewer's yeast topped with tomato and mozzarella for 3 minutes at 380°C"

}
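To make the chaining explicit, the following sketch simulates a sequence locally: each function receives the output of the previous one as its input. The prepare and cook functions are shortened stand-ins for the two actions above, addBasil is a hypothetical extra step, and runSequence is a local helper, not an OpenServerless API.

```javascript
// Shortened stand-ins for preparePizzaDough.js and cookPizza.js.
function prepare(args) {
  return {
    pizzaDough: "dough for " + args.howManyPerson,
    whichPizza: args.whichPizza,
  };
}

function cook(args) {
  return { result: "Cook " + args.pizzaDough + " as " + args.whichPizza };
}

// Hypothetical extra step, added without touching the other two actions.
function addBasil(args) {
  return { result: args.result + ", then add basil" };
}

// Local model of a sequence: the output of one action feeds the next.
function runSequence(actions, params) {
  return actions.reduce((input, action) => action(input), params);
}

const out = runSequence([prepare, cook, addBasil], {
  howManyPerson: 4,
  whichPizza: "Margherita",
});
console.log(out.result);
// prints: Cook dough for 4 as Margherita, then add basil
```

On the platform, the equivalent would be listing a third action in the --sequence flag.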

Conclusion

Now, thanks to sequences, our code is split correctly, and we are able

to scale it more easily!

2.1.5 - Triggers

Event source that triggers an action execution

Triggers

Now let’s see what a trigger is and how to use it.

We can define a trigger as an object representing an event source

that triggers the execution of actions. When activated by an event,

associated actions are executed.

In other words, a trigger is a mechanism that listens for specific

events or conditions and initiates actions in response to those events.

It acts as the starting point for a workflow.

Example: Sending Slack Notifications

Let’s consider a scenario where we want to send Slack notifications when

users visit specific pages and submit a contact form.

Step 1: Define the Trigger

We create a trigger named “PageVisitTrigger” that listens for events

related to user visits on our website. To create it, you can use the

following command:

ops trigger create PageVisitTrigger

Once the trigger is created, you can update it to add parameters, such

as the page parameter:

ops trigger update PageVisitTrigger --param page homepage

💡 NOTE

Besides create and update there is also delete; they work as expected,

creating, updating, and deleting triggers. In the Rules

section, we will also see the fire command, which requires you to

first create rules to do something useful.

Step 2: Associate the Trigger with an Action

Next, we create an action named “SendSlackNotification” that sends a

notification to Slack when invoked. Then, we associate this action with

our “PageVisitTrigger” trigger, specifying that it should be triggered

when users visit certain pages.

To associate the trigger with an action, you can use the following

command:

ops rule create TriggerRule PageVisitTrigger SendSlackNotification

We’ll have a better understanding of this aspect in the

Rules section.

In this example, whenever a user visits either the homepage or the

contact page, the “SendSlackNotification” action will be triggered,

resulting in a Slack notification being sent.
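The guide does not show the code of SendSlackNotification, so here is a minimal sketch of what it could look like. It only builds the message from the page parameter carried by the trigger; actually posting it to Slack is deliberately left out, and the returned text field is an assumption made for this example.

```javascript
// Hypothetical SendSlackNotification action: it only builds the message.
// Sending it to a Slack webhook is left out of this sketch.
function main(args) {
  // page comes from the trigger parameters (for example "homepage").
  const page = args.page || "unknown page";
  return { text: "A user visited the " + page };
}
```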

Conclusion

Triggers provide a flexible and scalable way to automate workflows based

on various events. By defining triggers and associating them with

actions, you can create powerful applications that respond dynamically

to user interactions, system events, or any other specified conditions.

2.1.6 - Rules

Connection rules between triggers and actions

Rules

Once we have a trigger and some actions, we can create rules for the

trigger. A rule connects the trigger with an action, so if you fire the

trigger, it will invoke the action. Let’s see this in practice in the

next listing.

Create data

First of all, create a file called alert.js.

function main() {

console.log("Suspicious activity!");

return {

result: "Suspicious activity!"

};

}

Then, create an OpenServerless action for this file:

ops action create alert alert.js

Now, create a trigger that we’ll call notifyAlert:

ops trigger create notifyAlert

Now everything is ready, and we can create our rule! The syntax follows

this pattern: “ops rule create {ruleName} {triggerName} {actionName}”.

ops rule create alertRule notifyAlert alert

Test your rule

Our environment can now be alerted if something suspicious occurs!

Before starting, let’s open another terminal window and enable polling

(with the command ops activation poll) to see what happens.

$ ops activation poll

Enter Ctrl-c to exit.

Polling for activation logs

It’s time to fire the trigger!

$ ops trigger fire notifyAlert

ok: triggered /_/notifyAlert with id 86b8d33f64b845f8b8d33f64b8f5f887

Now look at the result: check the terminal where you are polling

activations.

Enter Ctrl-c to exit.

Polling for activation logs

Activation: 'alert' (dfb43932d304483db43932d304383dcf)

[

"2024-02-20T03:15.15472494535Z stdout: Suspicious activity!"

]

Conclusion

💡 NOTE

As with all the other commands, you can execute list, update, and

delete by name.

A trigger can be connected to multiple rules, so firing one trigger can

activate multiple actions. Rules can also be enabled and disabled

without removing them. Continuing the last example, let’s disable the

rule and fire the trigger again to see what happens.

$ ops rule disable alertRule

ok: disabled rule alertRule

$ ops trigger fire notifyAlert

ok: triggered /_/notifyAlert with id 0f4fa69d910f4c738fa69d910f9c73af

In the activation polling window, we can see that no action is executed

now. Of course, we can enable the rule again with:

ops rule enable alertRule
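The relationship between triggers, rules, and actions can be summarized with a small in-memory model. This is only a sketch of the behaviour described in this section, not the platform's implementation: the rules array and the fire function are made up for the example, and they reuse the names alertRule and notifyAlert from above.

```javascript
// In-memory model of rules; names mirror the example above.
const rules = [
  {
    name: "alertRule",
    trigger: "notifyAlert",
    action: () => "Suspicious activity!",
    enabled: true,
  },
];

// Firing a trigger invokes the action of every enabled rule bound to it.
function fire(trigger) {
  return rules
    .filter((rule) => rule.trigger === trigger && rule.enabled)
    .map((rule) => rule.action());
}

console.log(fire("notifyAlert").length); // prints 1: the alert action ran

rules[0].enabled = false; // same effect as ops rule disable alertRule
console.log(fire("notifyAlert").length); // prints 0: nothing is invoked
```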

2.2 - Administration

System administration

Administration

If you are the administrator and you have access to the Kubernetes

cluster where OpenServerless is installed, you can administer the

system.

You have access to the ops admin subcommand with the following

synopsis:

Subcommand: ops admin

Usage:

admin adduser <username> <email> <password> [--all] [--redis] [--mongodb] [--minio] [--postgres] [--storagequota=<quota>|auto]

admin deleteuser <username>

Commands:

admin adduser create a new user in OpenServerless with the username, email and password provided

admin deleteuser delete a user from the OpenServerless installation via the username provided

Options:

--all enable all services

--redis enable redis

--mongodb enable mongodb

--minio enable minio

--postgres enable postgres

--storagequota=<quota>

2.3 - Debugging

Utilities to troubleshoot OpenServerless’ cluster

The ops debug subcommand gives access to many useful debugging

utilities, listed below.

You need access to the Kubernetes cluster where OpenServerless is

installed.

ops debug: available subcommands:

* apihost: show current apihost

* certs: show certificates

* config: show deployed configuration

* images: show current images

* ingress: show ingresses

* kube: kubernetes support subcommand prefix

* lb: show ingress load balancer

* log: show logs

* route: show openshift route

* runtimes: show runtimes

* status: show deployment status

* watch: watch nodes and pod deployment

* operator:version: show operator versions

The ops debug kube subcommand also gives detailed information about

the underlying Kubernetes cluster:

ops debug kube: available subcommands:

* ctl: execute a kubectl command, specify with CMD=<command>

* detect: detect the kind of kubernetes we are using

* exec: exec bash in pod P=...

* info: show info

* nodes: show nodes

* ns: show namespaces

* operator: describe operator

* pod: show pods and related

* svc: show services, routes and ingresses

* users: show openserverless users custom resources

* wait: wait for a value matching the given jsonpath on the specific resources under the namespace openserverless

2.4 - Project

How to deal with OpenServerless projects

Project

An OpenServerless Project

⚠️ WARNING

This document is still 🚧 work in progress 🚧

A project represents a logical unit of functionality whose boundaries

are up to you. Your app can contain one or more projects. The folder

structure of a project determines how the deployer finds and labels

packages and actions, how it deploys static web content, and what it

ignores.

You can detect and load entire projects into OpenServerless with a

single command using the ops CLI tool.

Project Detection

When deploying a project, ops checks in the given path for 2 special

folders:

The packages folder: contains sub-folders that are treated as

OpenServerless packages and are assumed to contain actions in the

form of either files or folders, which we refer to as Single File

Actions (SFA) and Multi File Actions (MFA).

The web folder: contains static web content.

Anything else is ignored. This lets you store things in the root folder

that are not meant to be deployed on OpenServerless (such as build

folders and project documentation).

Single File Actions

A single file action is simply a file with a specific extension (the

supported ones are .js, .py, .php, .go, and .java), which is directly deployed

as an action.

Multi File Actions

A multi-file action is a folder containing a main file and

dependencies. The folder is bundled into a zip file and deployed as an

action.
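As a rough illustration of the detection rules, the sketch below classifies a path relative to the project root. It is not the deployer's code: classify is a hypothetical helper, and it assumes that the action name is the file or folder name without extension and that actions sit exactly one level below their package folder.

```javascript
const path = require("path");

// Extensions listed above for single file actions.
const SFA_EXTENSIONS = [".js", ".py", ".php", ".go", ".java"];

// Hypothetical helper: tells what a project path would be treated as.
function classify(relativePath, isDirectory) {
  const parts = relativePath.split("/");
  if (parts[0] === "web") return { kind: "web" };
  // Assumption: only packages/<package>/<entry> is considered an action.
  if (parts[0] !== "packages" || parts.length !== 3) return { kind: "ignored" };
  const pkg = parts[1];
  const entry = parts[2];
  if (isDirectory) return { kind: "MFA", action: pkg + "/" + entry };
  const ext = path.extname(entry);
  if (!SFA_EXTENSIONS.includes(ext)) return { kind: "ignored" };
  return { kind: "SFA", action: pkg + "/" + path.basename(entry, ext) };
}

console.log(classify("packages/demo/hello.js", false).action); // prints demo/hello
console.log(classify("README.md", false).kind); // prints ignored
```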

2.5 - Web Assets

How to handle frontend deployment

Upload Web Assets

The web folder in the root of a project is used to deploy static

frontends. A static frontend is a collection of static assets under a

given folder that will be published in a web server under a path.

Every user has an associated web-accessible static area where you can

upload static assets.

You can upload a folder in this web area with

ops web upload <folder>

Synopsis:

Subcommand: ops web

Commands to upload and manage static content.

Usage:

web upload <folder> [--quiet] [--clean]

Commands:

upload <folder> Uploads a folder to the web bucket in OpenServerless.

Options:

--quiet Do not print anything to stdout.

--clean Remove all files from the web bucket instead.

3 - Reference

OpenServerless Developer Guide

Welcome to the OpenServerless Developer guide.

OpenServerless is based on Apache OpenWhisk

and the documentation in this section is derived from the official

OpenWhisk documentation.

In this section we mostly document how to write actions

(functions), the building blocks of OpenWhisk and

OpenServerless applications. There are also a few related entities for

managing actions (packages, parameters, etc.) that you also need to know.

You can write actions in a number of programming languages. OpenServerless

directly supports this list of programming

languages. The list is expanding over time.

See below for documentation related to:

There is also a tutorial and a development

kit to build your own runtime for your

favorite programming language.

3.1 - Entities

In this section you can find more information about OpenServerless and OpenWhisk entities.

3.1.1 - Actions

What Actions are and how to create and execute them

Actions

Actions are stateless functions that run on the OpenWhisk and

OpenServerless platform. For example, an action can be used to detect

the faces in an image, respond to a database change, respond to an API

call, or post a Tweet. In general, an action is invoked in response to

an event and produces some observable output.

An action may be created from a function programmed using a number of

supported languages and runtimes, or from a

binary-compatible executable.

While the actual function code will be specific to a language and

runtime, the operations to

create, invoke and manage an action are the same regardless of the

implementation choice.

We recommend that you review the cli and read

the tutorial before moving on to advanced

topics.

What you need to know about actions

Functions should be stateless, or idempotent. While the system

does not enforce this property, there is no guarantee that any state

maintained by an action will be available across invocations. In

some cases, deliberately leaking state across invocations may be

advantageous for performance, but also exposes some risks.

An action executes in a sandboxed environment, namely a container.

At any given time, a single activation will execute inside the

container. Subsequent invocations of the same action may reuse a

previous container, and there may exist more than one container at

any given time, each having its own state.

Invocations of an action are not ordered. If the user invokes an

action twice from the command line or the REST API, the second

invocation might run before the first. If the actions have side

effects, they might be observed in any order.

There is no guarantee that actions will execute atomically. Two

actions can run concurrently and their side effects can be

interleaved. OpenWhisk and OpenServerless do not ensure any

particular concurrent consistency model for side effects. Any

concurrency side effects will be implementation-dependent.

Actions have two phases: an initialization phase, and a run phase.

During initialization, the function is loaded and prepared for

execution. The run phase receives the action parameters provided at

invocation time. Initialization is skipped if an action is

dispatched to a previously initialized container — this is referred

to as a warm start. You can tell if an invocation was a warm

activation or a cold one requiring initialization by inspecting the

activation record.

An action runs for a bounded amount of time. This limit can be

configured per action, and applies to both the initialization and

the execution separately. If the action time limit is exceeded

during the initialization or run phase, the activation’s response

status is action developer error.
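The points about state and warm starts can be made concrete with a short sketch. The counter below lives at module level, so it is set up once per container and survives only as long as that container is reused. The names are chosen for this example only.

```javascript
// Module-level state: set up once per container, during initialization.
let invocationsInThisContainer = 0;

function main(args) {
  invocationsInThisContainer += 1;
  return {
    invocations: invocationsInThisContainer,
    // More than one invocation means this container was reused (warm start).
    warm: invocationsInThisContainer > 1,
  };
}
```

Since several containers may exist at the same time, each with its own counter, a value like this can serve as a cache or a hint but should never be relied on for correctness.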

Accessing action metadata within the action body

The action environment contains several properties that are specific to

the running action. These allow the action to programmatically work with

OpenWhisk and OpenServerless assets via the REST API, or set an internal

alarm when the action is about to use up its allotted time budget. The

properties are accessible via the system environment for all supported

runtimes: Node.js, Python, Swift, Java and Docker actions when using the

OpenWhisk and OpenServerless Docker skeleton.

__OW_API_HOST the API host for the OpenWhisk and OpenServerless

deployment running this action.

__OW_API_KEY the API key for the subject invoking the action; this

key may be a restricted API key. This property is absent unless

explicitly requested with the annotation

provide-api-key.

__OW_NAMESPACE the namespace for the activation (this may not be

the same as the namespace for the action).

__OW_ACTION_NAME the fully qualified name of the running action.

__OW_ACTION_VERSION the internal version number of the running

action.

__OW_ACTIVATION_ID the activation id for this running action

instance.

__OW_DEADLINE the approximate time when this action will have

consumed its entire duration quota (measured in epoch milliseconds).
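As a sketch of how these properties can be used from a Node.js action, the code below reads them from process.env and computes how much of the time budget is left. The remainingMs helper is made up for this example; it returns null when __OW_DEADLINE is not set, which is what you would see when running the code outside the platform.

```javascript
// Milliseconds left before the action uses up its time budget.
// Returns null when __OW_DEADLINE is not available.
function remainingMs(now = Date.now()) {
  const raw = process.env.__OW_DEADLINE;
  if (raw === undefined) return null;
  const deadline = Number(raw); // epoch milliseconds
  if (Number.isNaN(deadline)) return null;
  return deadline - now;
}

function main(args) {
  return {
    action: process.env.__OW_ACTION_NAME,
    activationId: process.env.__OW_ACTIVATION_ID,
    remainingMs: remainingMs(),
  };
}
```

An action doing long work in steps could check remainingMs() between steps and stop early when little time is left.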

3.1.2 - Web Actions

Actions annotated to quickly build web based applications

What web actions are

Web actions are OpenWhisk and OpenServerless actions annotated to quickly

enable you to build web based applications. This allows you to program

backend logic which your web application can access anonymously without

requiring an OpenWhisk and OpenServerless authentication key. It is up to the

action developer to implement their own desired authentication and

authorization (e.g. an OAuth flow).

Web action activations will be associated with the user that created the

action. This shifts the cost of an action activation from the

caller to the owner of the action.

Let’s take the following JavaScript action hello.js,

$ cat hello.js

function main({name}) {

var msg = 'you did not tell me who you are.';

if (name) {

msg = `hello ${name}!`

}

return {body: `<html><body><h3>${msg}</h3></body></html>`}

}

You may create a web action hello in the package demo for the

namespace guest using the CLI’s --web flag with a value of true or

yes:

$ ops package create demo

ok: created package demo

$ ops action create demo/hello hello.js --web true

ok: created action demo/hello

$ ops action get demo/hello --url

ok: got action hello

https://${APIHOST}/api/v1/web/guest/demo/hello

Using the --web flag with a value of true or yes allows an action

to be accessible via REST interface without the need for credentials. A

web action can be invoked using a URL that is structured as follows:

https://{APIHOST}/api/v1/web/{QUALIFIED ACTION NAME}.{EXT}

The fully qualified name of an action consists of three parts: the

namespace, the package name, and the action name.

The fully qualified name of the action must include its package name,

which is default if the action is not in a named package.

An example is guest/demo/hello. The last part of the URI is called the

extension, which is typically .http although other values are

permitted as described later. The web action API path may be used with

curl or wget without an API key. It may even be entered directly in

your browser.

Try opening:

https://${APIHOST}/api/v1/web/guest/demo/hello.http?name=Jane

in your web browser. Or try invoking the action via curl:

curl https://${APIHOST}/api/v1/web/guest/demo/hello.http?name=Jane

Here is an example of a web action that performs an HTTP redirect:

function main() {

return {

headers: { location: 'http://openwhisk.org' },

statusCode: 302

}

}

Or sets a cookie:

function main() {

return {

headers: {

'Set-Cookie': 'UserID=Jane; Max-Age=3600; Version=',

'Content-Type': 'text/html'

},

statusCode: 200,

body: '<html><body><h3>hello</h3></body></html>' }

}

Or sets multiple cookies:

function main() {

return {

headers: {

'Set-Cookie': [

'UserID=Jane; Max-Age=3600; Version=',

'SessionID=asdfgh123456; Path = /'

],

'Content-Type': 'text/html'

},

statusCode: 200,

body: '<html><body><h3>hello</h3></body></html>' }

}

Or returns an image/png:

function main() {

let png = <base 64 encoded string>

return { headers: { 'Content-Type': 'image/png' },

statusCode: 200,

body: png };

}

Or returns application/json:

function main(params) {

return {

statusCode: 200,

headers: { 'Content-Type': 'application/json' },

body: params

};

}

The default content-type for an HTTP response is application/json and

the body may be any allowed JSON value. The default content-type may be

omitted from the headers.

It is important to be aware of the response size

limit for actions since a response that exceeds the

predefined system limits will fail. Large objects should not be sent

inline through OpenWhisk and OpenServerless, but instead deferred to an

object store, for example.

Handling HTTP requests with actions

An OpenWhisk and OpenServerless action that is not a web action both requires

authentication and must respond with a JSON object. In contrast, web

actions may be invoked without authentication, and may be used to

implement HTTP handlers that respond with headers, statusCode, and

body content of different types. The web action must still return a

JSON object, but the OpenWhisk and OpenServerless system (namely the

controller) will treat a web action differently if its result includes

one or more of the following as top level JSON properties:

headers: a JSON object where the keys are header-names and the

values are string, number, or boolean values for those headers

(default is no headers). To send multiple values for a single

header, the header’s value should be a JSON array of values.

statusCode: a valid HTTP status code (default is 200 OK if body is

not empty, otherwise 204 No Content).

body: a string which is either plain text, JSON object or array,

or a base64 encoded string for binary data (default is empty

response).

The body is considered empty if it is null, the empty string "" or

undefined.

The controller will pass along the action-specified headers, if any, to

the HTTP client when terminating the request/response. Similarly the

controller will respond with the given status code when present. Lastly,

the body is passed along as the body of the response. If a

content-type header is not declared in the action result’s headers,

the body is interpreted as application/json for non-string values, and

text/html otherwise. When the content-type is defined, the

controller will determine if the response is binary data or plain text

and decode the string using a base64 decoder as needed. Should the body

fail to decode correctly, an error is returned to the caller.
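
The defaults described above can be sketched in a few lines of JavaScript. This is only an illustration of the documented rules, not the controller's actual code; the helper names isEmptyBody and effectiveResponse are hypothetical.

```javascript
// Illustrative only: mirrors the documented defaults, not the controller's implementation.

// A body is considered empty if it is null, undefined, or the empty string.
function isEmptyBody(body) {
  return body === null || body === undefined || body === '';
}

// Given an action result, compute the status code and content-type
// that the documented defaults would produce.
function effectiveResponse(result) {
  const headers = result.headers || {};
  const body = result.body;

  // Default status: 200 OK when there is a body, 204 No Content otherwise.
  const statusCode = result.statusCode !== undefined
    ? result.statusCode
    : (isEmptyBody(body) ? 204 : 200);

  // Header names are case-insensitive, so search for content-type accordingly.
  const declared = Object.keys(headers)
    .find((name) => name.toLowerCase() === 'content-type');

  // Without a declared content-type: text/html for strings, application/json otherwise.
  // The value is only meaningful when the body is not empty.
  const contentType = declared !== undefined
    ? headers[declared]
    : (typeof body === 'string' ? 'text/html' : 'application/json');

  return { statusCode, contentType };
}

console.log(effectiveResponse({ body: { ok: true } }));
console.log(effectiveResponse({ body: '<h3>hello</h3>' }));
console.log(effectiveResponse({}));
```

Declaring the content-type explicitly in the action result avoids relying on these defaults.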

HTTP Context

All web actions, when invoked, receive additional HTTP request details

as parameters to the action input argument. They are:

__ow_method (type: string): the HTTP method of the request.

__ow_headers (type: map string to string): the request headers.

__ow_path (type: string): the unmatched path of the request

(matching stops after consuming the action extension).

__ow_user (type: string): the namespace identifying the OpenWhisk

and OpenServerless authenticated subject.

__ow_body (type: string): the request body entity, as a base64

encoded string when content is binary or JSON object/array, or plain

string otherwise.

__ow_query (type: string): the query parameters from the request

as an unparsed string.

A request may not override any of the named __ow_ parameters above;

doing so will result in a failed request with status equal to 400 Bad

Request.

The __ow_user is only present when the web action is annotated to

require authentication

and allows a web action to implement its own authorization policy. The

__ow_query is available only when a web action elects to handle the

“raw” HTTP request. It is the unparsed query string taken from

the URI (parameters separated by &). The __ow_body

property is present either when handling “raw” HTTP requests, or when

the HTTP request entity is not a JSON object or form data. Web actions

otherwise receive query and body parameters as first class properties in

the action arguments with body parameters taking precedence over query

parameters, which in turn take precedence over action and package

parameters.
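
As a minimal sketch of how a web action might use this HTTP context, the action below branches on __ow_method and reads a request header from __ow_headers. The choice of allowed methods, the greeting text, and the name parameter are assumptions made for illustration.

```javascript
// A sketch of a web action that inspects the HTTP context parameters.
function main(params) {
  // The sample output in this document shows the method in lowercase;
  // normalize defensively in case that differs.
  const method = String(params.__ow_method || '').toLowerCase();

  // Assumption for this example: only GET and POST are accepted.
  if (method !== 'get' && method !== 'post') {
    return {
      statusCode: 405,
      headers: { Allow: 'GET, POST' },
      body: 'method not allowed'
    };
  }

  // Request headers arrive as a map of string to string.
  const headers = params.__ow_headers || {};
  const agent = headers['user-agent'] || 'unknown client';

  // Query and body parameters arrive as first class properties.
  const name = params.name || 'stranger';

  return {
    statusCode: 200,
    headers: { 'Content-Type': 'text/plain' },
    body: `hello ${name} (${method} from ${agent})`
  };
}
```

Because the function only reads its params argument, it can be exercised locally by calling main with a hand-built object before deploying it.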

Additional features

Web actions bring some additional features that include:

Content extensions: the request must specify its desired content

type as one of .json, .html, .http, .svg or .text. This is

done by adding an extension to the action name in the URI, so that

an action /guest/demo/hello is referenced as

/guest/demo/hello.http for example to receive an HTTP response

back. For convenience, the .http extension is assumed when no

extension is detected.

Query and body parameters as input: the action receives query

parameters as well as parameters in the request body. The precedence

order for merging parameters is: package parameters, binding

parameters, action parameters, query parameters, body parameters, with

each of these overriding any previous values in case of overlap. As

an example /guest/demo/hello.http?name=Jane will pass the argument

{name: "Jane"} to the action.

Form data: in addition to the standard application/json, web

actions may receive URL encoded form data

(application/x-www-form-urlencoded) as input.

Activation via multiple HTTP verbs: a web action may be invoked

via any of these HTTP methods: GET, POST, PUT, PATCH, and

DELETE, as well as HEAD and OPTIONS.

Non JSON body and raw HTTP entity handling: A web action may

accept an HTTP request body other than a JSON object, and may elect

to always receive such values as opaque values (plain text when not

binary, or base64 encoded string otherwise).
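
The precedence order for merging parameters listed above can be pictured as a left-to-right merge in which later sources win. The sketch below is only an illustration of that ordering, not the platform's merge code; mergeParameters and its argument names are hypothetical.

```javascript
// Illustrative only: later sources override earlier ones, in the documented order
// package -> binding -> action -> query -> body.
function mergeParameters({
  packageParams = {},
  bindingParams = {},
  actionParams = {},
  query = {},
  body = {}
}) {
  return Object.assign({}, packageParams, bindingParams, actionParams, query, body);
}

// Example: the same key is defined at several levels, and the body value wins.
const merged = mergeParameters({
  packageParams: { name: 'from-package', region: 'eu' },
  actionParams: { name: 'from-action' },
  query: { name: 'from-query' },
  body: { name: 'Jane' }
});

console.log(merged);
```

Keys defined at only one level, such as region in this example, pass through unchanged.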

The example below briefly sketches how you might use these features in a

web action. Consider an action /guest/demo/hello with the following

body:

function main(params) {

return { response: params };

}

This is an example of invoking the web action using the .json

extension, indicating a JSON response.

$ curl https://${APIHOST}/api/v1/web/guest/demo/hello.json

{

"response": {

"__ow_method": "get",

"__ow_headers": {

"accept": "*/*",

"connection": "close",

"host": "172.17.0.1",

"user-agent": "curl/7.43.0"

},

"__ow_path": ""

}

}

You can supply query parameters.

$ curl https://${APIHOST}/api/v1/web/guest/demo/hello.json?name=Jane

{

"response": {

"name": "Jane",

"__ow_method": "get",

"__ow_headers": {

"accept": "*/*",

"connection": "close",

"host": "172.17.0.1",

"user-agent": "curl/7.43.0"

},

"__ow_path": ""

}

}

You may use form data as input.

$ curl https://${APIHOST}/api/v1/web/guest/demo/hello.json -d "name=Jane"

{

"response": {

"name": "Jane",

"__ow_method": "post",

"__ow_headers": {

"accept": "*/*",

"connection": "close",

"content-length": "10",

"content-type": "application/x-www-form-urlencoded",

"host": "172.17.0.1",

"user-agent": "curl/7.43.0"

},

"__ow_path": ""

}

}

You may also invoke the action with a JSON object.

$ curl https://${APIHOST}/api/v1/web/guest/demo/hello.json -H 'Content-Type: application/json' -d '{"name":"Jane"}'

{

"response": {

"name": "Jane",

"__ow_method": "post",

"__ow_headers": {

"accept": "*/*",

"connection": "close",

"content-length": "15",

"content-type": "application/json",

"host": "172.17.0.1",

"user-agent": "curl/7.43.0"

},

"__ow_path": ""

}

}

You see above that for convenience, query parameters, form data, and

JSON object body entities are all treated as dictionaries, and their

values are directly accessible as action input properties. This is not

the case for web actions which opt to instead handle HTTP request

entities more directly, or when the web action receives an entity that

is not a JSON object.

Here is an example of using a “text” content-type with the same example

shown above.

$ curl https://${APIHOST}/api/v1/web/guest/demo/hello.json -H 'Content-Type: text/plain' -d "Jane"

{

"response": {

"__ow_method": "post",

"__ow_headers": {

"accept": "*/*",

"connection": "close",

"content-length": "4",

"content-type": "text/plain",

"host": "172.17.0.1",

"user-agent": "curl/7.43.0"

},

"__ow_path": "",

"__ow_body": "Jane"

}

}
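
An action that wants to handle both cases might check for __ow_body before falling back to the merged parameters. The following is a minimal sketch under stated assumptions: it treats any text/* content-type as a plain string and anything else as base64 encoded, following the description of __ow_body earlier in this section; the shape of the returned body is hypothetical.

```javascript
// A sketch of a web action that accepts either parsed parameters or an opaque body.
function main(params) {
  const headers = params.__ow_headers || {};
  const contentType = headers['content-type'] || '';

  if (params.__ow_body === undefined) {
    // JSON object or form data: fields arrive as top-level properties.
    return { body: { source: 'parameters', name: params.name } };
  }

  // Assumption: text/* entities arrive as plain strings,
  // while other entities arrive as base64 encoded strings.
  const text = contentType.startsWith('text/')
    ? params.__ow_body
    : Buffer.from(params.__ow_body, 'base64').toString('utf8');

  return { body: { source: 'raw body', text } };
}
```

Buffer is available as a global in the Node.js runtime, so no import is needed for the base64 decoding step.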

Content extensions